🤖 Fully Robotic Human-Form Agents: Autonomous Replacements and Collaborators in Military, Policing, Rescue and Firefighting

By Ronen Kolton Yehuda (Messiah King RKY), June 2025

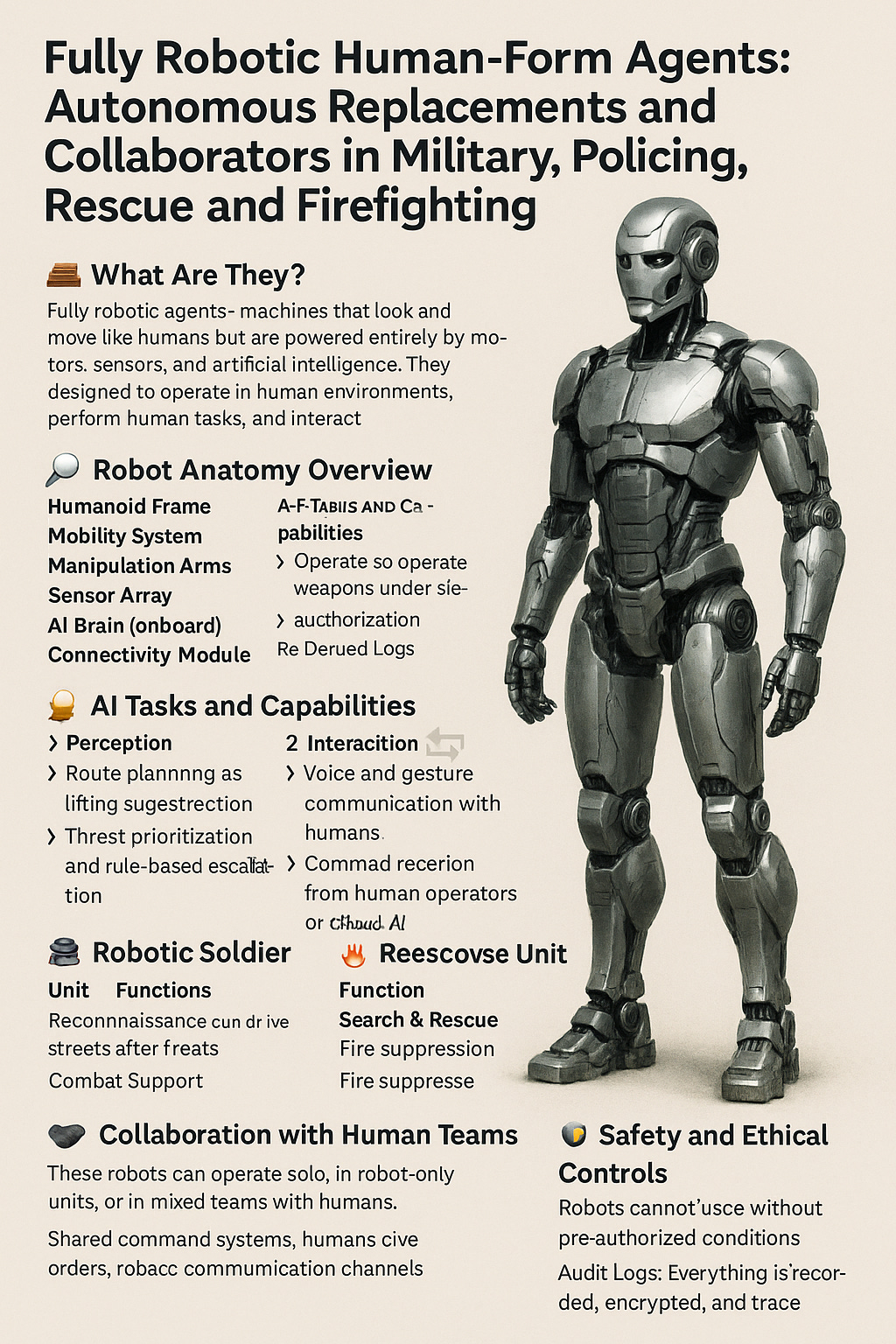

🔍 What Are They?

These are fully robotic agents—machines that look and move like humans but are powered entirely by motors, sensors, and artificial intelligence. They are designed to operate in human environments, perform human tasks, and interact with people or machines as part of coordinated missions.

They are not controlled by humans wearing suits or operating remote joysticks—they walk, think, and act using onboard AI and embedded logic.

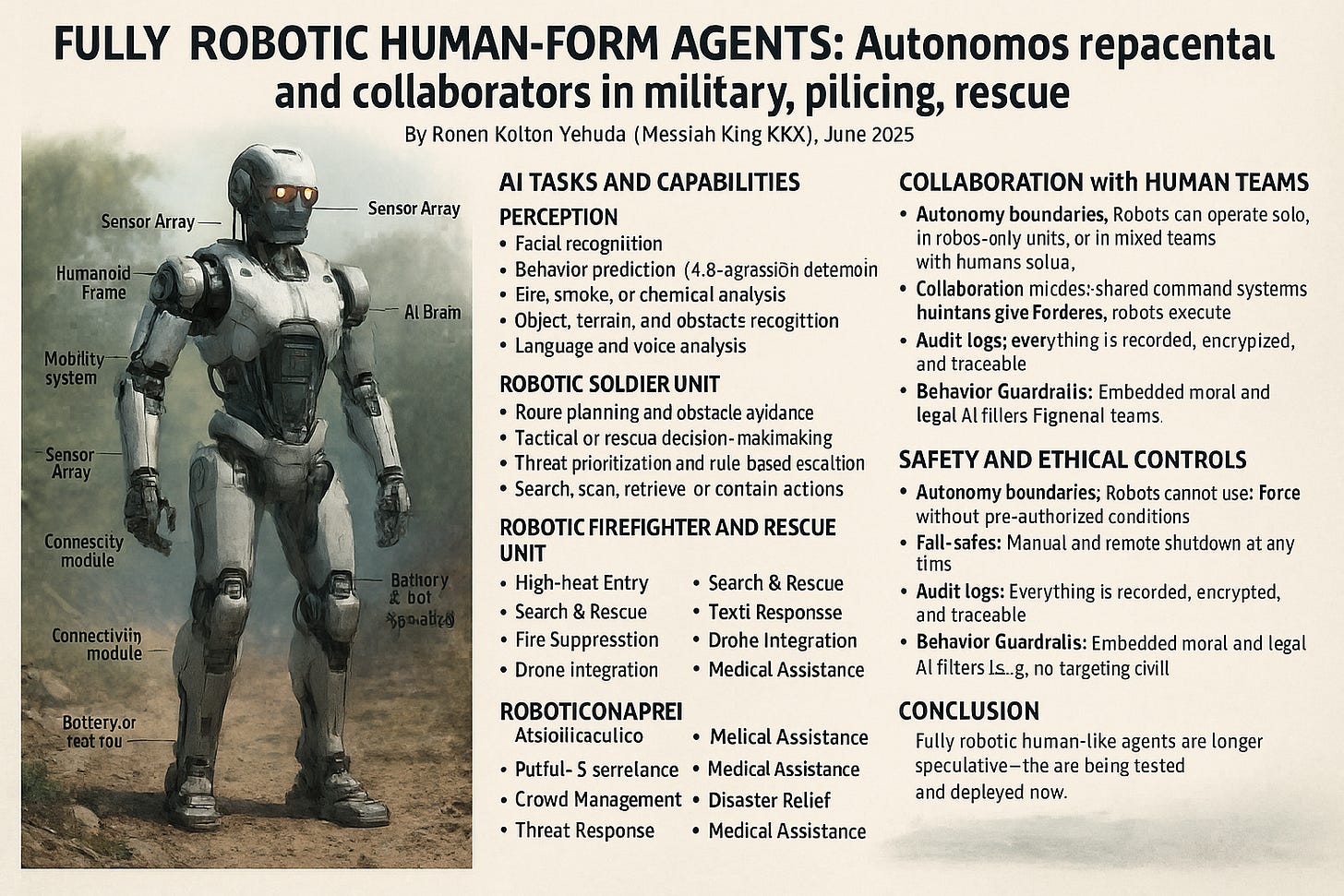

🧱 Robot Anatomy Overview

Subsystem | Description
--- | ---
Humanoid Frame | Human-sized robot body with legs, arms, head, and hands, allowing it to move through doors, stairs, and vehicles
Mobility System | Bipedal legs with gyroscopic balancing, or hybrid legs/wheels for agility
Manipulation Arms | Dexterous arms for lifting, carrying, opening doors, using tools, or non-lethal weapons
Sensor Array | 360° vision, thermal imaging, LIDAR, radar, microphones, gas detection, biometric scanning
AI Brain (onboard) | Decision-making system for perception, planning, navigation, communication, and task execution
Connectivity Module | Wi-Fi, 5G, or mesh network link to human teams or cloud command systems
Power Supply | Battery or fuel cell providing 3–8 hours of operation; swap or recharge as needed
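To make the 3–8 hour figure concrete, runtime is roughly usable pack energy divided by average power draw. The sketch below assumes a hypothetical 2 kWh pack, a 400 W average draw, and a 90% usable fraction; none of these numbers come from a real platform.

```python
import math


def runtime_hours(capacity_wh: float, avg_draw_w: float, usable_fraction: float = 0.9) -> float:
    """Estimate hours of operation from pack energy and average power draw.

    usable_fraction is an assumed reserve kept back to protect the cells;
    real packs publish their own usable capacity.
    """
    if capacity_wh <= 0 or avg_draw_w <= 0:
        raise ValueError("capacity and draw must be positive")
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    return capacity_wh * usable_fraction / avg_draw_w


def packs_needed(mission_hours: float, capacity_wh: float, avg_draw_w: float) -> int:
    """Packs required (installed pack included) to cover a mission with hot-swaps."""
    per_pack = runtime_hours(capacity_wh, avg_draw_w)
    return math.ceil(mission_hours / per_pack)


# Hypothetical figures: 2 kWh pack, 400 W average draw.
print(runtime_hours(2000, 400))     # 4.5
print(packs_needed(12, 2000, 400))  # 3
```

Swap downtime and the higher draw of climbing or carrying loads are ignored here; both would shorten the effective runtime.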

🧠 AI Tasks and Capabilities

Fully robotic agents operate independently within assigned protocols. Their AI handles:

1. Perception

Facial recognition

Behavior prediction (e.g., aggression detection in riots)

Fire, smoke, or chemical analysis

Object, terrain, and obstacle recognition

Language and voice analysis

2. Action Planning

Route planning and obstacle avoidance

Tactical or rescue decision-making

Threat prioritization and rule-based escalation

Search, scan, retrieve, or contain actions

3. Interaction

Voice and gesture communication with humans

Warning systems, negotiation, public announcements

Command reception from human operators or cloud AI

🔫 Robotic Soldier Unit

Function | Capability
--- | ---
Reconnaissance | Scout dangerous zones before human entry
Combat Support | Carry and operate weapons under strict authorization
Rescue & Extraction | Retrieve wounded soldiers under fire
Target Coordination | Laser marking and drone sync
Stealth Movement | Navigate buildings, forests, or battlefields autonomously

🚓 Robotic Police Unit

Function | Capability
--- | ---
Patrol & Surveillance | Walk or drive streets with live camera and behavioral monitoring
Crowd Management | Predict movement, provide verbal warnings, deploy barriers or foam
Threat Response | Use non-lethal tools (tasers, nets, drones) to stop violence
Identification | Face and ID scan to verify individuals or detect wanted suspects
Evidence Recording | Store encrypted audio-video logs for court and auditing

🔥 Robotic Firefighter and Rescue Unit

Function | Capability
--- | ---
High-Heat Entry | Enter burning or collapsing buildings safely
Search & Rescue | Detect victims using thermal imaging and heartbeat sensors
Fire Suppression | Operate hoses, nozzles, or extinguishers from within danger zones
Toxic Response | Identify and isolate gas leaks, radiation, or biohazards
Drone Integration | Guide aerial or crawler drones into tight or high-risk zones
Disaster Relief | Lift debris, distribute supplies, and coordinate evacuations
Medical Assistance | Stabilize and carry injured civilians from danger zones

🤝 Collaboration with Human Teams

These robots can operate solo, in robot-only units, or in mixed teams with humans. Collaboration includes:

Shared command systems (humans give orders, robots execute)

Autonomous zones (robot covers area where humans can’t go)

Robots handle danger, humans make decisions

Secure communication channels (AI translates and syncs data in real time)

🛡️ Safety and Ethical Controls

Autonomy Boundaries: Robots cannot use force without pre-authorized conditions

Fail-safes: Manual and remote shutdown at any time

Audit Logs: Everything is recorded, encrypted, and traceable

Behavior Guardrails: Embedded moral and legal AI filters (e.g., no targeting civilians)
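One common way to make "recorded and traceable" concrete is a hash-chained, append-only log: each entry stores the SHA-256 hash of the previous entry, so editing any past entry breaks verification. This is an illustrative sketch, not the system described here; encryption is left out and would be layered on separately (for example with an authenticated cipher), and the event fields are hypothetical.

```python
import copy
import hashlib
import json
import time
from typing import List, Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only, hash-chained event log (tamper-evident, not encrypted)."""

    def __init__(self) -> None:
        self.entries: List[dict] = []

    @staticmethod
    def _digest(ts: float, event: dict, prev: str) -> str:
        # Canonical JSON (sorted keys) so the same record always hashes the same way.
        payload = json.dumps({"ts": ts, "event": event, "prev": prev}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, event: dict, ts: Optional[float] = None) -> str:
        ts = time.time() if ts is None else ts
        event = copy.deepcopy(event)  # later changes by the caller cannot alter the log
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        digest = self._digest(ts, event, prev)
        self.entries.append({"ts": ts, "event": event, "prev": prev, "hash": digest})
        return digest

    def verify(self) -> bool:
        prev = GENESIS
        for rec in self.entries:
            if rec["prev"] != prev or self._digest(rec["ts"], rec["event"], rec["prev"]) != rec["hash"]:
                return False
            prev = rec["hash"]
        return True


log = AuditLog()
log.append({"type": "halt", "reason": "overheat"}, ts=1.0)
log.append({"type": "resume", "by": "operator"}, ts=2.0)
print(log.verify())  # True
log.entries[0]["event"]["reason"] = "none"  # simulate tampering
print(log.verify())  # False
```

A chain only proves integrity relative to its latest hash, so in practice that hash would be sent off-robot at intervals (for example to command systems), where it cannot be rewritten together with the log.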

🏗️ Current Real-World Examples

China: Advanced humanoid robots for industrial and military trials (Fourier Intelligence, Unitree Robotics)

USA: General-purpose humanoids such as Tesla Optimus and Boston Dynamics Atlas, plus Ghost Robotics quadrupeds trialed for defense and base-security tasks

South Korea, Japan: Firefighting and rescue robots in urban environments

Israel and Europe: Public security and disaster response robots in municipal trials

🧭 Conclusion

Fully robotic human-like agents are no longer purely speculative: humanoid and legged platforms are already in testing. As they mature, they are expected to:

Replace humans in the most dangerous roles

Collaborate in rescue and security missions

Operate 24/7 without fatigue or fear

Sync seamlessly with smart cities, drones, and human teams

They are not just machines—they are autonomous co-workers in human-shaped form, engineered to act when humans cannot.

Technical Framework for Fully Robotic Human-Form Agents in Military, Police, and Fire Response Operations

By Ronen Kolton Yehuda (Messiah King RKY), June 2025

Abstract

This article outlines the design architecture, functional modules, AI capabilities, operational logic, and deployment protocols of fully autonomous or semi-autonomous humanoid robotic agents engineered to replace or collaborate with human personnel in high-risk, high-stress frontline environments. Unlike wearable augmentations, these robots are independent actors equipped with multi-modal perception systems, mobile manipulation, mission-specific AI frameworks, and real-time communication interfaces.

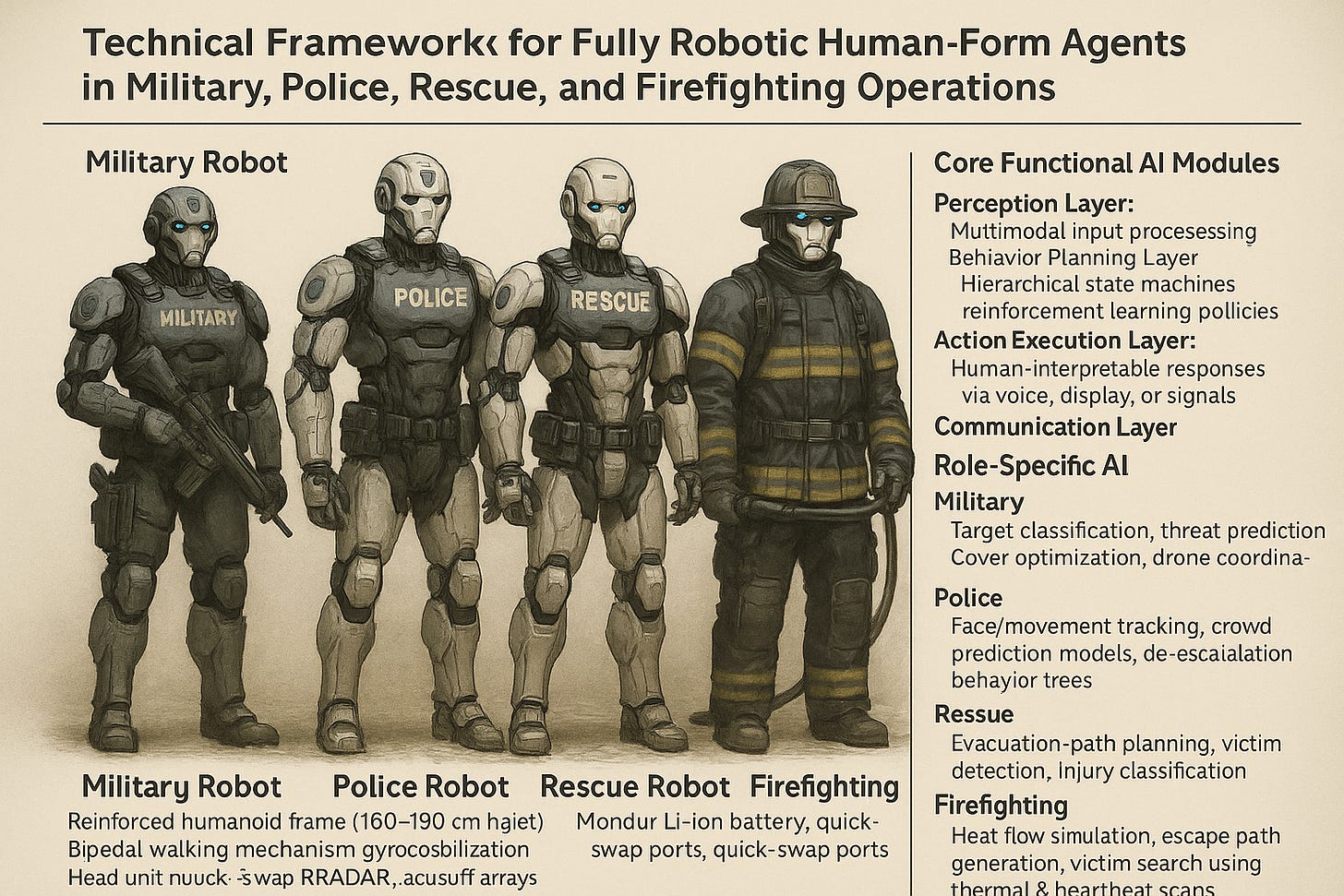

1. System Architecture

1.1 Mechanical Structure

Component | Description
--- | ---
Chassis | Reinforced humanoid frame (160–190 cm height) built from a carbon-titanium composite
Mobility Unit | Bipedal walking mechanism with gyroscopic stabilization; some variants include retractable wheels
Manipulator Arms | Dual-arm setup with 6–8 DOF, force feedback, and interchangeable tool interfaces
Head Unit | Sensor housing for vision, audio, radar, and environmental detection
Protective Shell | Thermal (up to 1200°C), ballistic, and chemical resistance, selected per mission profile
Power Supply | Modular Li-ion or solid-state battery with quick-swap ports; 3–8 hours runtime per module

2. Perception & Sensor Fusion

Sensor Type | Role
--- | ---
RGB + IR Cameras | Visual scene processing, object/person recognition, fire detection
LIDAR & RADAR | 3D mapping, SLAM, obstacle detection in low-visibility environments
Acoustic Arrays | Gunshot detection, voice command parsing, distress signal identification
Thermal Sensors | Human presence detection in smoke, fog, or darkness
Gas/Chemical Sensors | Hazard identification (CO, CH₄, SO₂, etc.) in firefighting or industrial settings
IMU + GPS | Localization, orientation, fall correction, motion prediction

All sensor data is processed via onboard edge-AI and optionally synced with command cloud systems.
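As a minimal example of sensor fusion, two independent range estimates of the same obstacle (say LIDAR and radar) can be combined by inverse-variance weighting, which trusts the less noisy sensor more. The variances below are hypothetical, and this static rule is a simplification; a Kalman filter applies the same weighting recursively over time.

```python
from typing import Iterable, Tuple


def fuse_estimates(readings: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of independent estimates of one quantity.

    readings: (value, variance) pairs with variance > 0.
    Returns (fused_value, fused_variance); the fused variance is never
    larger than the smallest input variance.
    """
    readings = list(readings)
    if not readings:
        raise ValueError("need at least one reading")
    weights = []
    for _, var in readings:
        if var <= 0:
            raise ValueError("variances must be positive")
        weights.append(1.0 / var)
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, readings)) / total
    return fused, 1.0 / total


# Hypothetical: LIDAR says 4.0 m (variance 0.01), radar says 4.3 m (variance 0.09).
value, variance = fuse_estimates([(4.0, 0.01), (4.3, 0.09)])
print(round(value, 3), round(variance, 4))  # 4.03 0.009
```

The LIDAR reading gets nine times the radar's weight here, so the fused range sits close to it; in dense smoke, where LIDAR degrades, its variance would rise and the balance would shift toward radar.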

3. AI Task Management System

3.1 Core Functional AI Modules

Perception Layer: Multimodal input processing for objects, terrain, humans, hazards

Behavior Planning Layer: Hierarchical state machines and reinforcement learning policies

Action Execution Layer: Real-time actuation control with feedback loops

Communication Layer: Human-interpretable responses via voice, display, or signals
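The four layers can be read as one sense-plan-act cycle. The sketch below wires them together, with a tiny rule-based state selection standing in for the behavior planning layer. The sensor field names, the 1 m obstacle distance, the 400 ppm gas normalization, and the velocity values are hypothetical; a real system would use learned perception and far richer policies.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class Percept:
    obstacle_ahead: bool
    person_detected: bool
    hazard_level: float  # 0.0 (none) to 1.0 (extreme), hypothetical scale


def perceive(raw: Dict[str, float]) -> Percept:
    """Perception layer: turn raw readings into a compact world description."""
    return Percept(
        obstacle_ahead=raw["lidar_min_m"] < 1.0,
        person_detected=bool(raw["thermal_person"]),
        hazard_level=min(1.0, raw["gas_ppm"] / 400.0),
    )


def plan(p: Percept) -> str:
    """Behavior planning layer: pick a state, highest-priority rule first."""
    if p.hazard_level >= 0.8:
        return "retreat"
    if p.person_detected:
        return "approach_person"
    if p.obstacle_ahead:
        return "reroute"
    return "advance"


# Action execution layer: (forward m/s, turn rad/s) per state, hypothetical values.
COMMANDS: Dict[str, Tuple[float, float]] = {
    "advance": (0.5, 0.0),
    "reroute": (0.0, 0.6),
    "approach_person": (0.3, 0.0),
    "retreat": (-0.5, 0.0),
}

# Communication layer: human-readable status for each state.
MESSAGES: Dict[str, str] = {
    "advance": "Path clear, advancing.",
    "reroute": "Obstacle ahead, rerouting.",
    "approach_person": "Person detected, approaching.",
    "retreat": "Hazard too high, withdrawing.",
}


def step(raw: Dict[str, float]) -> Tuple[str, Tuple[float, float], str]:
    """One cycle through all four layers."""
    state = plan(perceive(raw))
    return state, COMMANDS[state], MESSAGES[state]


print(step({"lidar_min_m": 0.4, "thermal_person": 0, "gas_ppm": 10}))
# ('reroute', (0.0, 0.6), 'Obstacle ahead, rerouting.')
```

Ordering the rules so that hazard is checked first means a dangerous gas reading overrides even a detected person, which keeps the robot from entering conditions it cannot survive.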

3.2 Role-Specific AI

Agent Role | AI Capabilities
--- | ---
Military | Target classification, threat prediction, cover optimization, drone coordination
Police | Face/movement tracking, crowd prediction models, de-escalation behavior trees
Firefighting | Heat flow simulation, escape path generation, victim search using thermal & heartbeat scans
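For the firefighting role, escape path generation can be illustrated with a breadth-first search over a temperature grid that treats cells above a limit as impassable. This is a swapped-in textbook technique, not the heat-flow simulation named above; in practice the grid would come from thermal mapping or a fire model, and the 60°C limit is a hypothetical value.

```python
from collections import deque
from typing import Dict, Iterable, List, Optional, Sequence, Tuple

Cell = Tuple[int, int]


def escape_path(
    temps: Sequence[Sequence[float]],
    start: Cell,
    exits: Iterable[Cell],
    max_temp_c: float = 60.0,
) -> Optional[List[Cell]]:
    """Shortest 4-connected path from start to any exit, avoiding cells hotter than max_temp_c.

    Returns the list of cells (start first), or None if every exit is cut off.
    The start cell itself is allowed even if hot, since the robot is already there.
    """
    rows, cols = len(temps), len(temps[0])
    targets = set(exits)
    prev: Dict[Cell, Optional[Cell]] = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell in targets:
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:  # walk back to the start
                path.append(node)
                node = prev[node]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and nxt not in prev and temps[nr][nc] <= max_temp_c:
                prev[nxt] = cell
                queue.append(nxt)
    return None


grid = [
    [20, 20, 20, 20],
    [20, 300, 300, 20],
    [20, 20, 300, 20],
]
print(escape_path(grid, start=(2, 1), exits=[(0, 3)]))
# [(2, 1), (2, 0), (1, 0), (0, 0), (0, 1), (0, 2), (0, 3)]
```

BFS returns the route with the fewest cells; weighting cells by temperature and using Dijkstra's algorithm would instead prefer cooler routes, which matters when carrying a victim.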

4. Autonomy and Safety Logic

4.1 Autonomy Levels

Level 1: Human teleoperation

Level 2: Assistive autonomy (suggestions only)

Level 3: Conditional autonomy with human override

Level 4: Full autonomy with ethical constraints and override capability
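One way to enforce these levels in software is a deny-by-default gate checked before any action starts. The action names and the level at which each may self-start are hypothetical. The fixed rules mirror this document's safety controls: force is never self-authorized at any level, and a human stop override always wins.

```python
from enum import IntEnum
from typing import Dict, Optional


class Autonomy(IntEnum):
    TELEOPERATION = 1  # Level 1: human teleoperation
    ASSISTIVE = 2      # Level 2: suggestions only
    CONDITIONAL = 3    # Level 3: conditional autonomy with human override
    FULL = 4           # Level 4: full autonomy with constraints and override


# Lowest level at which each action class may start without a human command.
# None means "never self-start": a human must approve it explicitly.
SELF_START_LEVEL: Dict[str, Optional[Autonomy]] = {
    "suggest": Autonomy.ASSISTIVE,
    "navigate": Autonomy.CONDITIONAL,
    "manipulate": Autonomy.CONDITIONAL,
    "apply_force": None,
}


def may_execute(action: str, level: Autonomy, human_approved: bool = False,
                stop_override: bool = False) -> bool:
    """Return True only if the action is permitted right now."""
    if stop_override:
        return False  # a human stop command overrides everything
    if action not in SELF_START_LEVEL:
        return False  # unknown actions are denied by default
    required = SELF_START_LEVEL[action]
    if required is None:
        return human_approved  # no autonomy level unlocks this class
    return human_approved or level >= required


print(may_execute("navigate", Autonomy.CONDITIONAL))  # True
print(may_execute("apply_force", Autonomy.FULL))      # False
```

Denying unknown actions means a software update that adds a new capability cannot silently widen the robot's authority; the new action must be listed explicitly.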

4.2 Safety Protocols

Fail-Safe: If systems overheat, lose connection, or report low-confidence perception, the robot halts automatically

Ethical Guardrails: Asimov-style constraints prevent targeting humans unless authorized under law

Audit Trail: Encrypted action logging for post-operation review or legal validation

Override Interface: Mobile, voice, or command-center-based manual override control
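The automatic-halt rule can be sketched as a watchdog check run every control cycle. Because a robot cannot directly observe its own misclassifications, this sketch uses a low perception-confidence score as a proxy; all three thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical limits for illustration only.
MAX_CORE_TEMP_C = 85.0
MAX_LINK_SILENCE_S = 2.0
MIN_PERCEPTION_CONFIDENCE = 0.6


@dataclass
class Health:
    core_temp_c: float
    seconds_since_heartbeat: float  # time since last message from command
    perception_confidence: float    # 0.0 to 1.0


def halt_reasons(h: Health) -> List[str]:
    """Return every tripped fail-safe condition; an empty list means keep running."""
    reasons = []
    if h.core_temp_c > MAX_CORE_TEMP_C:
        reasons.append("overheat")
    if h.seconds_since_heartbeat > MAX_LINK_SILENCE_S:
        reasons.append("link_lost")
    if h.perception_confidence < MIN_PERCEPTION_CONFIDENCE:
        reasons.append("low_confidence")
    return reasons


print(halt_reasons(Health(70.0, 0.5, 0.92)))  # []
print(halt_reasons(Health(91.0, 3.5, 0.92)))  # ['overheat', 'link_lost']
```

Collecting every tripped condition, rather than stopping at the first, gives the audit trail a complete record of why the robot halted.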

5. Manipulation & Task Execution

Function | Capabilities
--- | ---
Mobility | Walk, jog, climb stairs/ladders, cross debris, enter vehicles
Manual Tasks | Lift, carry (up to 200 kg), open/close doors, operate hoses or weapons
Tool Use | Attachments: fire hose, drill, shield, non-lethal weapon, camera, stretcher arm
Victim Handling | Securely lift and carry humans with soft-mode adaptive grip
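A soft-mode adaptive grip can be approximated by starting with a light grip and raising force in small steps only while the load still slips, under a hard ceiling. The slip test, step size, and newton values here are hypothetical; real grippers close this loop continuously using tactile or force-torque sensing.

```python
from typing import Callable, Optional


def settle_grip(slips_at: Callable[[float], bool], start_n: float = 5.0,
                step_n: float = 2.5, max_n: float = 40.0) -> Optional[float]:
    """Return the lowest tested grip force (N) that stops slipping, or None.

    slips_at(force) reports whether the held load still slips at that force.
    None means the ceiling was reached; the robot should stop and ask for
    help rather than squeeze harder.
    """
    force = start_n
    while force <= max_n:
        if not slips_at(force):
            return force
        force += step_n
    return None


# Simulated load that stops slipping at 12 N or more.
print(settle_grip(lambda f: f < 12.0))  # 12.5
```

The ceiling is what makes the mode "soft": when a person cannot be held securely within it, the sketch reports failure instead of escalating force.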

6. Communications and Coordination

Network Stack: 5G, Wi-Fi 6, and short-range mesh protocols

Voice Interaction: Bidirectional speech with NLP and multi-language models

Inter-Agent Sync: Robotic units share maps, targets, and hazard data in real-time

Cloud Control: Remote operation or monitoring via secure cloud dashboards
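Sharing hazard data between units raises the question of how to merge conflicting reports. A simple rule, assumed here rather than taken from the source, is newest-observation-wins per grid cell, with ties broken toward the higher hazard. Because it takes a maximum over (timestamp, hazard) pairs, the merge gives the same result whatever order the reports arrive in.

```python
from typing import Dict, Tuple

Cell = Tuple[int, int]
Reading = Tuple[float, float]  # (hazard level 0..1, timestamp in seconds)


def merge_hazard_maps(*maps: Dict[Cell, Reading]) -> Dict[Cell, Reading]:
    """Merge per-robot hazard maps: newest reading wins, ties go to the higher hazard."""
    merged: Dict[Cell, Reading] = {}
    for m in maps:
        for cell, (level, ts) in m.items():
            current = merged.get(cell)
            # Compare (timestamp, level) so the rule is a total order and order-independent.
            if current is None or (ts, level) > (current[1], current[0]):
                merged[cell] = (level, ts)
    return merged


robot_a = {(0, 0): (0.2, 10.0), (1, 0): (0.9, 5.0)}
robot_b = {(0, 0): (0.7, 12.0), (1, 0): (0.1, 8.0), (2, 0): (0.5, 3.0)}
print(merge_hazard_maps(robot_a, robot_b))
# {(0, 0): (0.7, 12.0), (1, 0): (0.1, 8.0), (2, 0): (0.5, 3.0)}
```

Newest-wins lets a cell be cleared once a fire is out, as cell (1, 0) shows; a purely conservative max-hazard rule would keep it marked dangerous forever.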

7. Applications and Deployment Scenarios

7.1 Combat Operations

Urban and rural reconnaissance

Direct combat support under controlled engagement logic

Retrieval of injured personnel under fire

Mobile surveillance and perimeter security

7.2 Law Enforcement

Riot control (non-lethal enforcement with compliance AI)

Patrol and identification (facial recognition + behavior flagging)

Hostage situations (first contact, negotiation assistant)

Event security (visual scan, tracking, perimeter alerts)

7.3 Fire and Emergency Response

Entry into structures exceeding human survivability thresholds

Victim location via thermal and heartbeat sensors

Structural analysis and safe zone designation

On-site firefighting with mounted or carried extinguishers

8. Maintenance & Reliability

Subsystem | Maintenance Interval | Notes
--- | --- | ---
Joints/Actuators | 500 hrs | Replaceable with quick-lock cartridges
Batteries | 1000 cycles | Hot-swappable; redundancy slots included
Sensors | Self-calibrating | Auto-check during startup
AI Core | Weekly OTA updates | Includes logic patches and ethical constraints
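These intervals translate directly into a status check. The sketch below uses the 500-hour and 1000-cycle figures from this table and the ">5% calibration drift" replacement threshold from the maintenance table in the FRHA framework. Reading "1000 cycles" as a retirement limit, and the status fields themselves, are assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List

ACTUATOR_SERVICE_HOURS = 500   # joints/actuators interval
BATTERY_CYCLE_LIMIT = 1000     # battery cycles (treated here as a retirement limit)
MAX_CALIBRATION_DRIFT = 0.05   # replace sensors beyond 5% drift


@dataclass
class UnitStatus:
    actuator_hours_since_service: float
    battery_cycles: int
    sensor_drift: Dict[str, float] = field(default_factory=dict)  # name -> fractional drift


def maintenance_due(s: UnitStatus) -> List[str]:
    """List the maintenance actions a unit currently needs."""
    due = []
    if s.actuator_hours_since_service >= ACTUATOR_SERVICE_HOURS:
        due.append("swap joint/actuator cartridges")
    if s.battery_cycles >= BATTERY_CYCLE_LIMIT:
        due.append("retire battery module")
    for name, drift in sorted(s.sensor_drift.items()):
        if abs(drift) > MAX_CALIBRATION_DRIFT:
            due.append(f"replace sensor: {name}")
    return due


print(maintenance_due(UnitStatus(510, 200, {"lidar": 0.02, "thermal": 0.07})))
# ['swap joint/actuator cartridges', 'replace sensor: thermal']
```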

9. Compliance and Ethics

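Designed to align with IEEE 7000 (developed as project P7000), the robotics standards of ISO/TC 299, and the guiding principles on lethal autonomous weapons systems affirmed under the UN Convention on Certain Conventional Weapons (CCW)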

Real-time transparency features (camera feed mirrors, public mode lights)

Configurable Rules of Engagement (RoE) logic profiles per jurisdiction

Optional identity masking (e.g., no human face display) to avoid uncanny valley and maintain trust

10. Conclusion

Fully robotic human-form agents represent the convergence of humanoid robotics, high-level AI autonomy, and mission-specific logic frameworks. Their success in military, police, and emergency response domains hinges on balancing:

Autonomous capability

Legal compliance

Mission specialization

Human compatibility

These agents will not only replace humans in high-risk roles, but also extend the reach and speed of coordinated responses in dynamic and hazardous environments.

🤖 Fully Robotic Agents: The Human-Shaped Robots Protecting Our Streets, Cities, and Soldiers

By Ronen Kolton Yehuda (Messiah King RKY), June 2025

The world is changing fast—and with it, the way we protect lives. In place of only human boots on the ground, a new kind of protector is emerging: fully robotic agents that walk, talk, and act like humans—but are machines powered by advanced artificial intelligence.

These robots aren’t sci-fi dreams. They already exist. In countries like China, the United States, and South Korea, humanoid robots are being tested to fight fires, assist police, and serve in military missions. Unlike wearable suits or remote drones, these robots are fully autonomous—they can move, see, think, and act on their own.

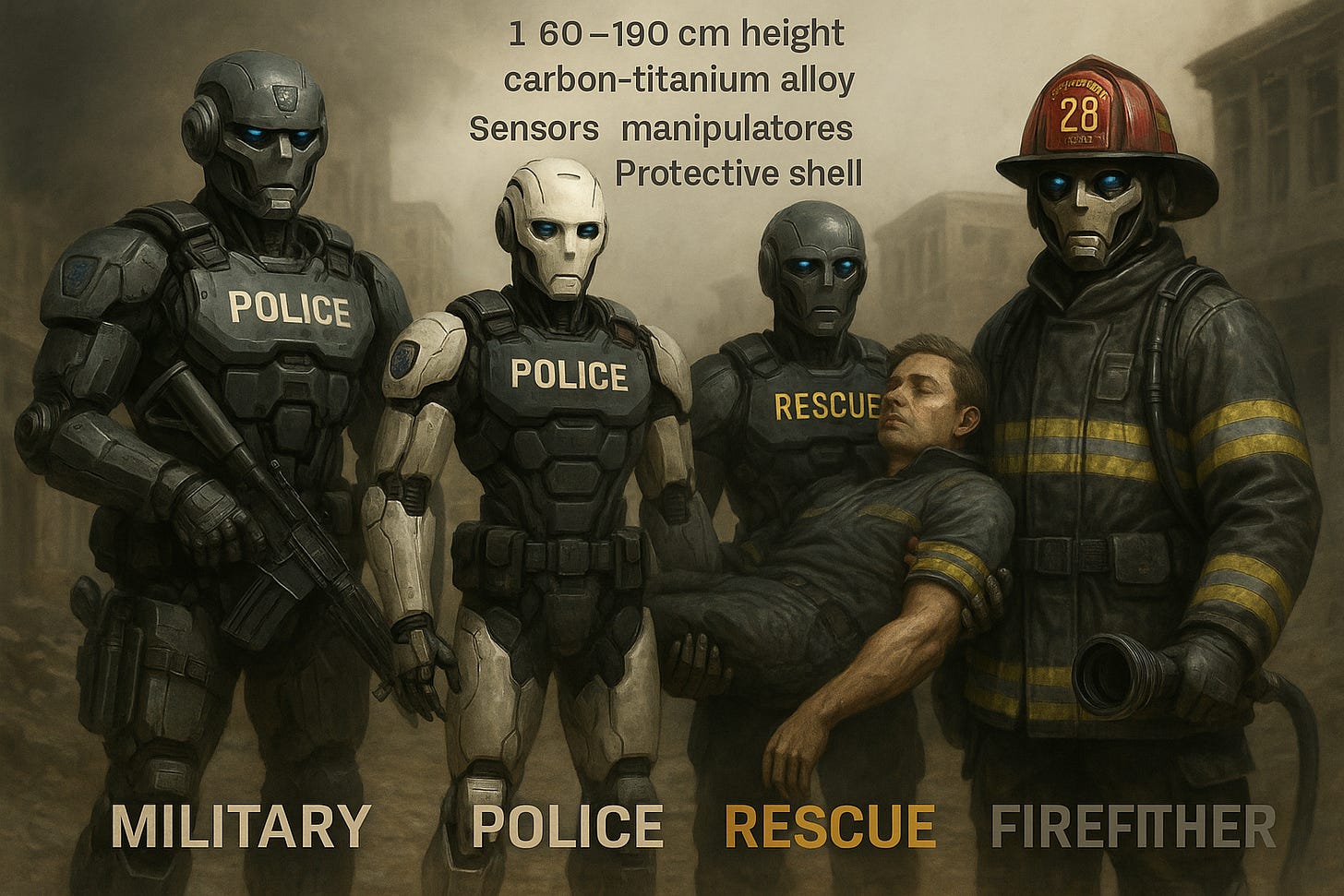

🤖 What Are Human-Shaped Robotic Agents?

They’re robots that look and move like people. Built with legs, arms, eyes (cameras), and ears (microphones), they are designed to work in the same buildings, vehicles, and streets we do.

Each robot is built with:

A human-sized frame (about 5 to 6 feet tall)

Bipedal walking ability (can walk or run on two legs)

Sensors to see in darkness, hear commands, feel temperature, detect gas leaks, or sense danger

AI software to decide what to do—just like a trained professional

Communication systems to talk with humans and other robots

🛡️ How They Replace or Support Humans

⚔️ Military Robots: Robotic Soldiers

Robotic soldiers can:

Scout dangerous zones before troops arrive

Carry heavy weapons and equipment

Help injured soldiers out of combat

Track enemy movements using AI

Defend perimeters day and night

They follow strict rules and can only use force under human-authorized conditions.

🚓 Police Robots: Law Enforcement Without Risk

In cities, police robots help reduce danger for human officers. They can:

Patrol streets and scan crowds for threats

Use facial recognition to identify suspects

Speak with people and give commands

Subdue violent attackers using non-lethal tools (like tasers or nets)

Record everything they see and hear for legal evidence

Police robots are designed to talk calmly, follow orders, and avoid violence unless absolutely necessary.

🔥 Firefighting Robots: Going Where Humans Can’t

Firefighting robots are some of the most heroic of all. They can:

Walk into burning buildings without getting tired or injured

See through smoke using thermal vision

Search for people trapped under rubble or in toxic environments

Spray water, carry hoses, or move debris

Coordinate with drones and human firefighters

These machines go where it’s too dangerous for any human, especially in chemical spills, wildfires, or collapsed buildings.

🧠 What the AI Actually Does

The brains of these robots are AI systems trained on large volumes of real-world and simulated situations. Their job is to:

Understand where they are (navigation)

Identify people, objects, or hazards

Decide the best action in complex environments

Communicate with humans and other robots

Follow ethical rules and mission instructions

If anything seems unsafe or unclear, the robot either stops immediately or asks for human instructions.

🤝 Humans and Robots: Working as a Team

These robots are not meant to take over everything. Instead, they:

Replace humans in dangerous environments

Work alongside humans in shared missions

Report back to human commanders who stay in control

For example:

In a riot, a robot can block a street while human officers handle the crowd

In a fire, the robot can search upstairs while humans rescue from below

In war, robots can guard the perimeter while humans manage strategy

🌍 Already in Use

Countries developing and testing these robots include:

China – humanoid industrial and tactical robots (Fourier, Unitree)

USA – humanoid platforms such as Tesla Optimus and Boston Dynamics Atlas, plus Ghost Robotics quadrupeds trialed for defense and base security

Japan/South Korea – rescue robots, smart firefighter units

Israel and Europe – security robots for airports, stadiums, and critical infrastructure

🔐 Are They Safe?

Yes—if designed correctly. Every robot includes:

Manual override systems (can be shut down remotely)

Ethical programming (no unauthorized violence or privacy violations)

Encrypted data logging (to review any mistake or misuse)

Strict legal boundaries (customized by country or city)

Robots do not "decide" to act on their own outside of instructions. They follow the rules they are given.

🔚 Conclusion

Fully robotic human-shaped agents are no longer the future—they are today’s new frontline. From military zones to burning buildings, from quiet patrols to explosive emergencies, these robots will save lives by going where humans can’t—or shouldn’t.

They’re not here to replace humanity. They’re here to protect it.

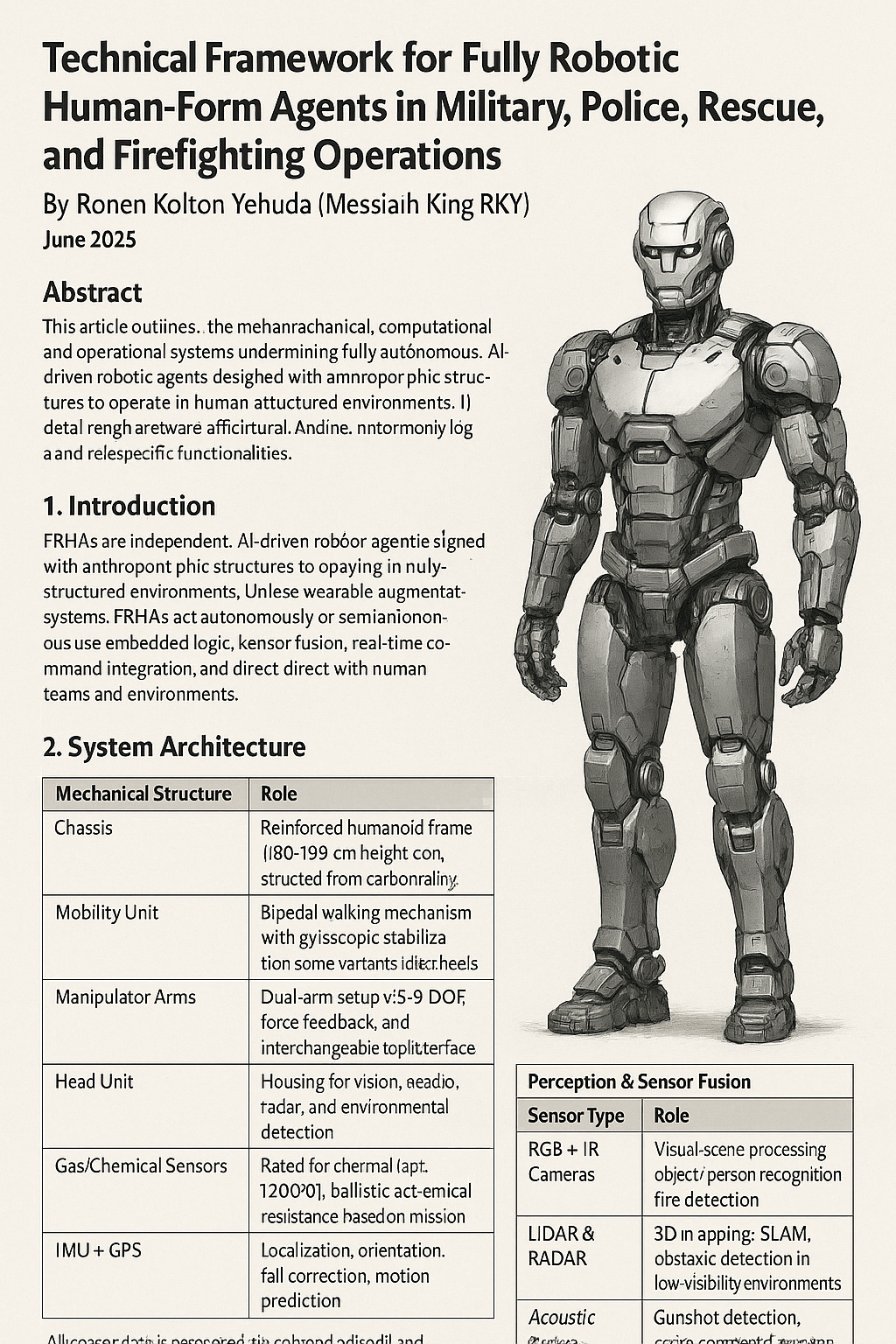

Technical Framework for Fully Robotic Human-Form Agents in Military, Police, Rescue, and Firefighting Operations

By Ronen Kolton Yehuda (Messiah King RKY), June 2025

Abstract

This technical framework outlines the mechanical, computational, and operational systems underpinning fully robotic human-form agents (FRHAs) designed to replace or collaborate with human personnel in military, law enforcement, rescue, and firefighting. It details the hardware architecture, AI control layers, autonomy logic, and role-specific functionalities required for safe and effective deployment.

1. Introduction

FRHAs are independent, AI-driven robotic agents designed with anthropomorphic structures to operate in human-structured environments. Unlike wearable augmentation systems, FRHAs act autonomously or semi-autonomously using embedded logic, sensor fusion, and real-time command integration. Their form factor allows them to traverse staircases, enter vehicles, manipulate tools, and interact directly with human teams and environments.

2. System Architecture

2.1 Mechanical Composition

Component | Description
--- | ---
Frame | Carbon-titanium composite humanoid chassis (160–190 cm)
Mobility System | Bipedal locomotion with gyroscopic stabilization and optional retractable wheels
Manipulator Arms | Dual 6–8 DOF arms with adaptive gripping, tool slots, and haptic feedback
Protective Shell | Ballistic, fire, and chemical-resistant plating tailored to role
Power Unit | Modular Li-ion batteries or hydrogen fuel cells (3–8 hour runtime)

2.2 Sensor Suite

Sensor | Function
--- | ---
Vision | RGB, IR, and thermal cameras for perception, tracking, and fire/smoke detection
LIDAR & RADAR | 3D environment mapping, obstacle avoidance, and SLAM
Acoustic Arrays | Gunshot localization, voice command parsing
Gas/Chemical Sensors | Hazard detection (e.g., CO, ammonia, radiation)
IMU + GPS | Internal orientation, navigation, and fall correction

3. AI and Decision Framework

3.1 Core AI Modules

Perception Layer: Real-time multimodal sensor processing

Behavior Layer: Finite state machines and reinforcement learning agents

Planning Layer: Path planning, risk analysis, task prioritization

Communication Layer: Voice response generation, gesture understanding, network sync
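Task prioritization in the planning layer can be sketched with a priority queue. The ordering here (most urgent first, then lowest risk, then first-come) and the 0–1 urgency and risk scores are assumptions for illustration; Python's standard `heapq` module provides the queue.

```python
import heapq
import itertools
from typing import List, Tuple


class TaskQueue:
    """Min-heap of tasks ordered by (-urgency, risk, arrival order)."""

    def __init__(self) -> None:
        self._heap: List[Tuple[float, float, int, str]] = []
        self._arrival = itertools.count()  # tie-breaker keeps equal tasks FIFO

    def push(self, name: str, urgency: float, risk: float) -> None:
        # Negate urgency because heapq pops the smallest tuple first.
        heapq.heappush(self._heap, (-urgency, risk, next(self._arrival), name))

    def pop(self) -> str:
        return heapq.heappop(self._heap)[-1]

    def __len__(self) -> int:
        return len(self._heap)


q = TaskQueue()
q.push("search floor 3", urgency=0.9, risk=0.6)
q.push("clear debris at exit", urgency=0.4, risk=0.2)
q.push("close gas valve", urgency=0.9, risk=0.3)
print([q.pop() for _ in range(len(q))])
# ['close gas valve', 'search floor 3', 'clear debris at exit']
```

A lexicographic ordering is the simplest choice; a weighted score (urgency minus a risk penalty) would let a much safer task overtake a slightly more urgent one.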

3.2 Role-Based AI Extension

Role | Specific AI Functions
--- | ---
Military | Target ID, tactical modeling, engagement logic, drone swarming
Police | Facial recognition, behavioral threat prediction, non-lethal force deployment
Rescue | Victim localization via thermal/heartbeat scan, debris analysis, triage coordination
Firefighting | Heat flow modeling, collapse prediction, autonomous hose control

4. Autonomy and Safety

Autonomy Level | Description
--- | ---
L1 | Manual remote operation
L2 | Assisted decision support
L3 | Conditional autonomy with override
L4 | Full mission execution with ethical rule constraints

Safety Features:

Multi-tiered override systems (manual, remote, biometric)

Ethical constraint enforcement via hardcoded logic (Asimov-like laws)

Encrypted event logging for post-mission audit

5. Communication & Network

Dual-channel comms: 5G/Wi-Fi mesh + fallback LoRa protocols

Encrypted peer-to-peer data sync among FRHAs

Real-time dashboard access for commanders

Vocal/gesture-based human-robot coordination
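The dual-channel design implies a selection rule: use the preferred link when it is up, and fall back to LoRa when it is not. Because LoRa carries only small packets (the exact limit depends on region and data rate), the sketch also checks payload size; the link names and byte limits are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Link:
    name: str
    up: bool
    max_payload_bytes: int


def choose_link(links: Sequence[Link], payload_bytes: int) -> Optional[str]:
    """First link, in preference order, that is up and can carry the payload."""
    for link in links:
        if link.up and payload_bytes <= link.max_payload_bytes:
            return link.name
    return None


# Preference order: 5G, then Wi-Fi mesh, then LoRa fallback (hypothetical limits).
links = [
    Link("5g", up=False, max_payload_bytes=1_000_000),
    Link("wifi_mesh", up=False, max_payload_bytes=1_000_000),
    Link("lora", up=True, max_payload_bytes=200),
]
print(choose_link(links, 64))    # lora
print(choose_link(links, 5000))  # None
```

A `None` result would mean queuing that message (a video clip, say) until a high-bandwidth link returns, while compact status updates keep flowing over LoRa.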

6. Role-Specific Capabilities

6.1 Military Deployment

Combat support in high-risk zones

Enemy tracking and designation

Extraction of wounded personnel

6.2 Policing Applications

Patrol in urban environments

Riot control with AI-powered escalation logic

Evidence logging with GDPR compliance

6.3 Rescue Operations

Search and rescue in collapsed structures or disaster zones

Emergency triage stabilization

Autonomous victim evacuation

6.4 Firefighting

Entry into high-heat and chemical environments

Aerial/ground drone coordination

Fire suppression tool management

7. Maintenance and Upgrades

Component | Cycle | Notes
--- | --- | ---
Joints/Actuators | 500 hrs | Swappable cartridges
Power Units | 1000 cycles | Hot-swappable
Sensors | Self-diagnosing | Replace if >5% calibration drift
AI Core | OTA updates weekly | Ethics, logic, navigation enhancements

8. Compliance and Legal Considerations

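Adherence to IEEE 7000 (developed as project P7000) and the robotics standards of ISO/TC 299

Alignment with the guiding principles on lethal autonomous weapons systems affirmed under the UN Convention on Certain Conventional Weapons (CCW)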

Regional rules of engagement logic profiles

Real-time transparency toggles for public deployments

9. Deployment Strategy

Phase | Description
--- | ---
I | Testing in elite military/police/rescue units
II | Expansion to civil emergency and municipal use
III | Joint deployment with human operators in live zones
IV | Standardization, regulation, international interoperability

10. Conclusion

FRHAs represent the convergence of humanoid robotics, embedded AI, and mission-specific automation for real-world frontline operations. Their architecture enables them to replace or assist humans in extreme environments without compromising on ethics, control, or situational intelligence. These agents are not just tools—they are operational platforms.

End of Document

Legal Statement for Intellectual Property and Collaboration

Author: Ronen Kolton Yehuda (MKR: Messiah King RKY)

The concept, structure, and written formulation of the Fully Robotic Human-Form Agents (FRHAs) — including their military, police, rescue, and firefighting configurations — are the original innovation and intellectual property of Ronen Kolton Yehuda (MKR: Messiah King RKY).

This statement affirms authorship and creative development of the FRHA system as a unified humanoid robotic framework integrating autonomous AI logic, modular mechanical architecture, sensor fusion, and ethical safety constraints for professional and emergency operations.

The author does not claim ownership over general robotic engineering or scientific principles, but solely over the original invention, system design logic, terminology, and written expression presented in this work.

Any reproduction, adaptation, modification, or commercial development of this invention or its documentation requires the author’s prior written approval.

Academic and journalistic references are permitted when proper credit is given.

The author welcomes lawful collaboration, licensing, and partnership proposals, provided that intellectual property rights, authorship acknowledgment, and ethical technology standards are fully respected.

All rights reserved internationally.

Published by MKR: Messiah King RKY (Ronen Kolton Yehuda)
