The Rise of AI Lie Detection: How Machines Are Learning to Spot the Truth

By Ronen Kolton Yehuda (Messiah King RKY)

Can a Machine Tell If You're Lying?

For decades, lie detection has relied on a familiar tool: the polygraph. You’ve seen it in movies — a person strapped to sensors while being asked tough questions, their heart rate and sweat levels monitored for signs of deception. But the polygraph has one major problem: it doesn’t actually detect lies. It detects stress — and stress can come from many sources.

Now, a new generation of truth-detecting tools is emerging, and it’s powered by Artificial Intelligence (AI).

What Makes AI Truth Detection Different?

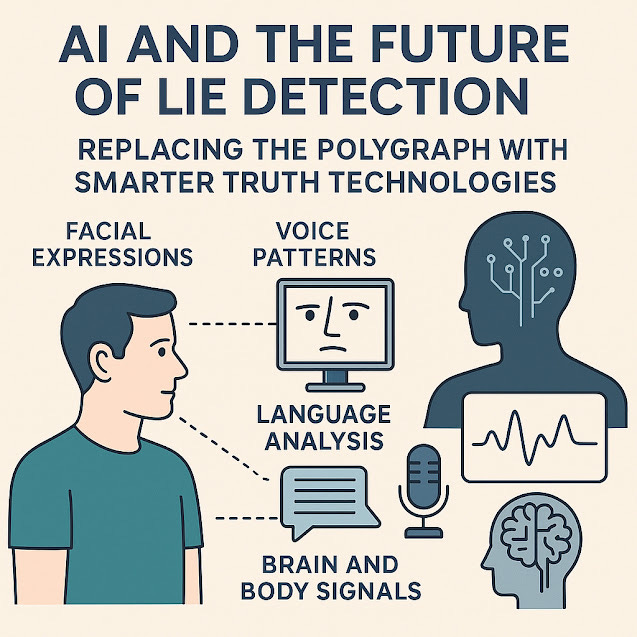

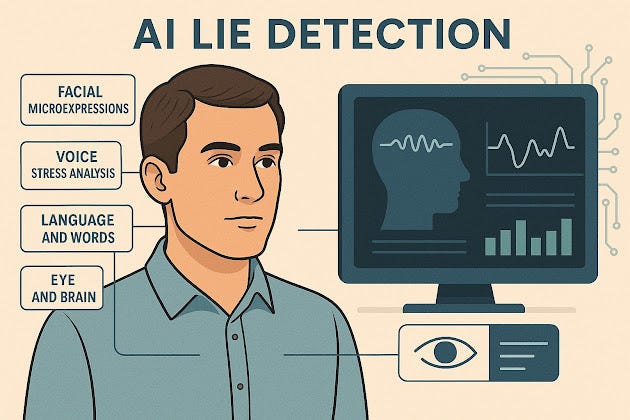

AI doesn’t just listen to your heartbeat or check if you’re sweating. It watches how your face moves, analyzes the tone of your voice, reads your words, and can even scan your brain activity. It combines multiple clues to build a fuller picture of what’s going on.

These systems don’t just guess — they analyze patterns that are hard for people to fake or hide. And they can do it in real time.

What AI Looks At

Here are the main areas AI uses to detect deception:

1. Facial Microexpressions

Tiny, involuntary facial movements can reveal hidden emotions — like fear, guilt, or stress. AI cameras can detect these microexpressions faster than the human eye.

2. Voice Stress and Tone

When we lie, our voices often change in subtle ways — such as slight tremors, pauses, or changes in pitch. AI listens for these clues.

3. Language and Word Patterns

AI systems analyze the words you use — whether your story is logical, detailed, or vague — and how it compares to truthful speech patterns.

4. Eyes and Brain

Some systems track your eye movement, pupil size, or even brainwaves using EEG. These indicators can show whether you're recognizing something you're trying to hide.

5. Multimodal Analysis

The most advanced systems combine all these inputs — face, voice, language, and bio-signals — for a complete analysis.

Where AI Lie Detection Is Being Used

Airports & Borders: Some countries are testing AI interview agents that talk to travelers and analyze their responses.

Police & Security: AI tools assist investigators by identifying stress or inconsistencies during interviews.

Hiring & Corporate Screening: For high-security roles, AI helps evaluate truthfulness in background checks.

Therapy & Trauma Research: AI helps detect when people are struggling to talk about painful truths.

Legal Systems: Some systems are being tested to support courtroom analysis (not as final judgment, but as a tool).

How It Compares to the Polygraph

| Feature | Traditional Polygraph | AI-Based Lie Detection |
|---|---|---|
| What It Measures | Heart rate, sweat, breathing | Face, voice, words, brain, eyes |
| Accuracy | Often questioned | Higher (up to 90% in tests) |
| Can Be Fooled? | Yes, by staying calm | Harder to fool (multi-signal) |
| Real-Time Use | No (requires operator) | Yes, can be live or remote |
| Used in Court? | Rarely | Not yet; still experimental |

Are There Risks?

Yes. With great power comes great responsibility. Truth-detecting AI raises important questions:

Privacy: Should machines analyze your face and voice without permission?

Consent: People must understand and agree to be analyzed.

Bias: AI must be trained on diverse people — across cultures, ages, and genders — to avoid unfair results.

Ethics: The goal is not to punish, but to understand. Machines should help humans, not replace them.

The Future of Truth Technology

AI won’t eliminate human judgment — and it shouldn’t. But it can support it. Just like a microscope helps us see the invisible, truth AI helps us see what’s hidden in speech, emotion, or behavior.

In the coming years, we may see AI lie detection used in more places — not just to catch people, but to protect the innocent, reduce bias, and build more honest systems.

The key is to use it wisely, transparently, and ethically.

Artificial Intelligence for Truth Detection: A Comprehensive Technical Framework

By Ronen Kolton Yehuda (Messiah King RKY)

Abstract

AI-driven truth detection systems are emerging as sophisticated alternatives to traditional polygraphs, offering real-time, multimodal assessment of human behavior during interrogation, interviews, and security screenings. These systems analyze facial microexpressions, voice characteristics, linguistic patterns, and physiological signals to assess truthfulness with high accuracy. This article presents the complete architecture of such systems — from sensor inputs and signal processing to machine learning models, fusion strategies, and ethical safeguards.

1. Introduction

Deception detection is crucial in criminal justice, counterintelligence, border control, high-risk hiring, and conflict resolution. While polygraphs rely on indirect signs of stress, AI offers direct behavioral analysis powered by computer vision, speech processing, biometric sensing, and natural language understanding.

This new class of systems can operate autonomously or alongside human interviewers to increase reliability, reduce bias, and provide detailed insight into the cognitive and emotional state of subjects under examination.

2. System Architecture Overview

An AI-based truth detection system consists of the following layered components:

2.1 Input Acquisition Layer

Visual: RGB/IR/depth cameras for facial expression, gaze, and microexpression tracking

Audio: Directional microphones to capture voice stress, pitch, tremor

Text: Real-time transcription of speech for NLP processing

Physiological (optional): EEG, heart rate variability (HRV), eye tracking, GSR

Environmental: Timestamp, context, question history

2.2 Signal Processing Layer

Face Processing: Action Unit (AU) encoding, head motion, blink rate, pupil dilation

Voice Processing: Extraction of MFCCs, jitter, shimmer, prosody, pause detection

Language Processing: Tokenization, syntax tree parsing, semantic embedding

EEG Processing: Filtering and artifact removal (e.g., band-pass filtering, ICA), ERP detection (e.g., P300), spectral entropy

Normalization: Aligns and scales data across subjects and sessions
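The normalization step can be illustrated with a per-subject z-score, which rescales each person's readings against their own baseline so that values are comparable across subjects. This is a minimal sketch, not a description of any particular system: the function name `zscore_per_subject` and the sample pitch values are hypothetical.

```python
from statistics import mean, pstdev


def zscore_per_subject(samples):
    """Rescale each subject's values to zero mean and unit variance.

    samples: dict mapping a subject id to a list of raw feature values
    (hypothetical layout chosen for this illustration).
    """
    normalized = {}
    for subject, values in samples.items():
        mu = mean(values)
        sigma = pstdev(values)
        if sigma == 0:
            # A constant signal carries no variation to scale.
            normalized[subject] = [0.0 for _ in values]
        else:
            normalized[subject] = [(v - mu) / sigma for v in values]
    return normalized


# Illustrative pitch readings (Hz) for two speakers with different baselines.
raw_pitch_hz = {
    "subject_a": [110.0, 120.0, 130.0],
    "subject_b": [200.0, 220.0, 240.0],
}
normalized_pitch = zscore_per_subject(raw_pitch_hz)
print(normalized_pitch)
```

After rescaling, both speakers' readings land on the same scale even though their raw pitch ranges differ.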

3. Machine Learning Models

3.1 Vision and Expression Models

CNNs for spatial feature extraction

CNN-LSTM or 3D-CNNs for spatiotemporal facial dynamics

Pre-trained models: VGGFace, OpenFace, AffectNet-based pipelines

3.2 Voice Analysis Models

LSTM-RNNs, GRUs, or Transformer-based encoders to model sequential patterns in speech features

Spectrogram CNNs for emotion inference from audio signals

3.3 Language Models

Transformer architectures (BERT, RoBERTa, GPT-based) to analyze sentence structure, logical consistency, and emotional indicators

Deception-specific embeddings trained on annotated datasets (e.g., Real-Liars Corpus)

3.4 Physiological Models

CNN-LSTM or BiLSTM networks for EEG-based deception recognition

Event-Related Potential (ERP) detection for recognition-related deception (e.g., Guilty Knowledge Test using P300 signals)
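The ERP approach can be sketched as follows: EEG epochs time-locked to each stimulus are averaged, and the mean amplitude in a post-stimulus window is compared between "probe" items and irrelevant items. This is a simplified illustration under stated assumptions: the 300 to 600 ms window, the function names, and the toy data are hypothetical, and a real pipeline would also need filtering, artifact rejection, and a statistical test.

```python
from statistics import mean


def average_epochs(epochs):
    """Average equal-length epochs sample by sample to obtain an ERP waveform."""
    length = len(epochs[0])
    return [mean([epoch[i] for epoch in epochs]) for i in range(length)]


def window_mean(erp, sample_rate_hz, start_ms, end_ms):
    """Mean amplitude of the ERP between start_ms and end_ms after the stimulus."""
    start = int(start_ms * sample_rate_hz / 1000)
    end = int(end_ms * sample_rate_hz / 1000)
    return mean(erp[start:end])


def p300_difference(probe_epochs, irrelevant_epochs, sample_rate_hz,
                    start_ms=300, end_ms=600):
    """Probe-minus-irrelevant amplitude in the assumed P300 window."""
    probe = window_mean(average_epochs(probe_epochs), sample_rate_hz, start_ms, end_ms)
    irrelevant = window_mean(average_epochs(irrelevant_epochs), sample_rate_hz, start_ms, end_ms)
    return probe - irrelevant


# Toy epochs: 1 second at 10 Hz, amplitudes in microvolts (made-up numbers).
probe_epochs = [
    [0, 0, 0, 4, 6, 4, 0, 0, 0, 0],
    [0, 0, 0, 6, 8, 6, 0, 0, 0, 0],
]
irrelevant_epochs = [
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 1, 1, 0, 0, 0, 0],
]
print(p300_difference(probe_epochs, irrelevant_epochs, sample_rate_hz=10))
```

A larger positive difference suggests a stronger recognition response to the probe items; where to set the decision threshold is left open here.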

4. Multimodal Fusion Strategies

4.1 Early Fusion

Concatenation of raw or preprocessed features across modalities

Suitable for synchronized signals (e.g., audio + facial expressions)
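Early fusion by concatenation can be sketched in a few lines. The example assumes both modalities have already been aligned to the same frames; the function name `early_fusion` and the feature values are hypothetical.

```python
def early_fusion(face_features, voice_features):
    """Concatenate per-frame feature vectors from two time-aligned modalities."""
    if len(face_features) != len(voice_features):
        raise ValueError("modalities must be aligned to the same number of frames")
    return [face + voice for face, voice in zip(face_features, voice_features)]


# Two frames: two facial features and one voice feature per frame (made-up values).
face = [[0.1, 0.2], [0.3, 0.4]]
voice = [[1.0], [2.0]]
print(early_fusion(face, voice))
```

The fused vectors would then be passed to a single downstream classifier, which is why misaligned inputs are rejected rather than silently truncated.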

4.2 Late Fusion

Independent classifiers per modality, followed by:

Weighted averaging of probability outputs

Voting ensembles

Bayesian model averaging
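Two of the late-fusion options listed above, weighted averaging and voting, can be sketched as follows. The modality names, weights, and probabilities are hypothetical; in practice the weights would be chosen on validation data.

```python
def weighted_average(probabilities, weights):
    """Combine per-modality deception probabilities using fixed weights."""
    total_weight = sum(weights[m] for m in probabilities)
    return sum(probabilities[m] * weights[m] for m in probabilities) / total_weight


def majority_vote(probabilities, threshold=0.5):
    """Return True when more than half of the modalities exceed the threshold."""
    votes = sum(1 for p in probabilities.values() if p >= threshold)
    return votes > len(probabilities) / 2


# Outputs of three independent classifiers (made-up numbers).
probabilities = {"face": 0.8, "voice": 0.6, "text": 0.3}
weights = {"face": 2.0, "voice": 1.0, "text": 1.0}

print(weighted_average(probabilities, weights))
print(majority_vote(probabilities))
```

With these numbers the weighted average is about 0.625 and two of the three modalities vote "deceptive", so the two rules agree; they can disagree when one confident modality outweighs several weak ones.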

4.3 Hybrid Fusion

Combines early and late techniques with meta-learners trained on modality-specific decisions

5. Training and Datasets

Datasets: Columbia Deception Corpus, SEMAINE, DAIC-WOZ, P300-based datasets, multimodal emotion databases

Data Augmentation: GANs and synthetic signal generation

Ground Truth: Obtained via controlled experiments, post-confession confirmation, or expert annotation

6. Deployment Modalities

Kiosk-based systems for automated interviews (e.g., airport security)

Embedded modules for online remote hiring, customer service, or telemedicine

Courtroom assistive tools for real-time analysis of testimony (advisory only)

Wearable kits for mobile intelligence, police use, and psychological evaluation

7. Performance Benchmarks

| Metric | Value Range (State of the Art) |
|---|---|
| Accuracy (multimodal) | 85% – 95% |
| False Positive Rate | 5% – 15% |
| Real-Time Latency | < 500 ms (per frame or input cycle) |
| Modality Robustness | Medium–High |
| Scalability | High (cloud or edge deployable) |
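To make the accuracy and false-positive figures concrete, the sketch below derives them from a confusion matrix. The counts are hypothetical: they assume 1,000 screened people of whom 50 are deceptive, a proportion that is an assumption of this example and not a figure from the benchmarks above.

```python
def screening_metrics(tp, fp, tn, fn):
    """Derive common metrics from confusion-matrix counts."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "false_positive_rate": fp / (fp + tn),
        "sensitivity": tp / (tp + fn),
        "precision": tp / (tp + fp),
    }


# Hypothetical counts: 50 deceptive people (45 caught, 5 missed)
# and 950 truthful people (95 wrongly flagged, 855 cleared).
metrics = screening_metrics(tp=45, fp=95, tn=855, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these illustrative counts, accuracy is 0.90 and the false-positive rate is 0.10, yet precision is only about 0.32, because truthful people far outnumber deceptive ones in the assumed population. Reporting several metrics together gives a fuller picture than accuracy alone.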

8. Applications

Border & Airport Security: Autonomous deception checks using AI avatars

Investigative Interviewing: Police and military use to support human judgment

Corporate Risk Assessment: Insider threat detection and fraud screening

Mental Health: Detection of concealed trauma, PTSD, emotional suppression

Judicial Systems: AI assistants to detect inconsistencies or distress in real time (under review in some jurisdictions)

9. Ethical and Legal Considerations

9.1 Privacy and Consent

Mandatory informed consent for biometric and neurophysiological monitoring

Real-time anonymization or encryption of sensitive data

9.2 Bias and Fairness

Cross-cultural model training

Regular fairness audits on outputs and datasets

Transparency in error rates by demographic category
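Reporting error rates by demographic category can be as simple as computing the false-positive rate separately for each group. The sketch below assumes a record layout of `(group, truly_deceptive, flagged_deceptive)`; that layout, the group labels, and the data are hypothetical.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """Compute the share of truthful people wrongly flagged, per group.

    records: iterable of (group, truly_deceptive, flagged_deceptive) tuples.
    """
    false_positives = defaultdict(int)
    truthful = defaultdict(int)
    for group, truly_deceptive, flagged in records:
        if not truly_deceptive:
            truthful[group] += 1
            if flagged:
                false_positives[group] += 1
    return {group: false_positives[group] / truthful[group] for group in truthful}


# Made-up audit records for two groups.
records = [
    ("A", False, False), ("A", False, True), ("A", True, True),
    ("B", False, False), ("B", False, False), ("B", False, False), ("B", False, True),
]
print(false_positive_rate_by_group(records))
```

A large gap between groups, such as the 0.5 versus 0.25 produced by this toy data, is the kind of disparity a fairness audit would flag for investigation.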

9.3 Accountability and Oversight

AI outcomes should be advisory only unless reviewed by human experts

Creation of Ethical Oversight Boards to regulate misuse

Explainable AI (XAI) modules for public understanding of system decisions

10. Future Directions

Federated Learning for decentralized, privacy-preserving model training

AI Conscience Layer: Integrating fairness and logic checks before outputs

Real-Time Adaptation: Personal baseline calibration per subject

Global Ethical Standards: Shared governance models for implementation

Integration with Legal Systems: Establishing admissibility protocols and appeals

Conclusion

AI-based truth detection systems represent a significant technological advancement beyond traditional polygraphs. Through multimodal input, machine learning models, and real-time analysis, these systems offer enhanced accuracy, scalability, and fairness potential. However, their deployment must be handled with strict ethical frameworks, continuous validation, and human oversight. When responsibly developed, these systems could become foundational tools in building trust and resolving conflict across sectors.

From Polygraphs to AI: The Evolution of Truth Detection Technology

By Ronen Kolton Yehuda (Messiah King RKY)

Introduction: The Human Need for Truth

In courtrooms, security checkpoints, job interviews, and intelligence investigations, the ability to detect lies has always held powerful appeal. For over a century, the polygraph — a machine that measures physiological responses — was considered the cutting edge of lie detection. But today, we are entering a new era.

Artificial Intelligence (AI) is revolutionizing how we detect deception, replacing subjective guesswork and stress-based signals with advanced, data-driven systems that learn from facial expressions, voice tone, language use, and even brain activity.

Part 1: The Traditional Polygraph

The polygraph monitors several physiological indicators:

Heart rate

Blood pressure

Respiration rate

Skin conductivity (sweating)

The assumption is that when a person lies, the stress causes measurable changes in these signals. While polygraphs have been used by law enforcement, intelligence agencies, and private investigators, they face major issues:

False positives: Nervous truth-tellers may fail the test

False negatives: Trained individuals can suppress signs of stress

Subjectivity: Interpreting results depends on the examiner

Legal rejection: Many courts do not admit polygraph results as evidence

Part 2: Enter AI – A New Approach to Lie Detection

Instead of relying only on physical signs of stress, AI truth detection systems take a broader, more holistic view. They analyze behavior, voice, expressions, language, and even neural signals.

Key AI Technologies in Lie Detection:

Facial Microexpression Analysis

AI can detect fleeting facial expressions that last less than a second, revealing stress, fear, or concealment.

Voice Stress Analysis

AI models can pick up changes in tone, hesitation, and vocal tremors — even subtle ones undetectable by humans.

Language and Text Analysis

Using Natural Language Processing (NLP), AI evaluates word choice, sentence structure, and narrative patterns for signs of deception.

Physiological and Brain Data

Advanced systems can read EEG brainwave data or biometric signals like eye movements and pupil dilation.

Multimodal Fusion

The most advanced systems combine multiple data sources — voice, face, words, and body signals — to form a comprehensive analysis.

Part 3: AI vs. Polygraph — Key Differences

| Feature | Traditional Polygraph | AI-Based Lie Detection |
|---|---|---|
| Input Signals | Heart rate, sweat, breath | Voice, face, language, brain, etc. |
| Bias & Subjectivity | High – examiner-dependent | Low – consistent algorithms |
| Accuracy | Often disputed (60–80%) | Up to 90% with multimodal input |
| Speed | Slow and manual | Real-time or near real-time |
| Legal Use | Often rejected | Emerging – under development |
| Adaptability | Fixed setup | Can run on cameras, microphones, or kiosks |

Applications of AI Truth Tech

Airports and borders: AI interviews travelers and flags suspicious behavior

Police interrogations: AI assists in evaluating suspect statements

Job screenings: AI flags inconsistencies or high-risk behaviors

Therapy and counseling: Detects suppressed trauma or emotional cues

Corporate and finance: Preventing fraud or internal security threats

Ethical and Legal Considerations

As powerful as AI-based lie detection is, it comes with risks:

Privacy: Should AI analyze your voice, face, or thoughts without consent?

Bias: Algorithms must be trained across diverse groups to avoid discrimination

Misuse: In authoritarian regimes, such tools could be abused for control

Oversight: Strong regulation and transparency are critical

Conclusion

We are at a crossroads. The polygraph, once hailed as a breakthrough, is being replaced by smarter, more accurate, and less intrusive AI-based technologies. But the mission remains the same: to find the truth. In this new era, we must use AI not just to detect lies, but to build systems that uphold fairness, ethics, and human dignity.

AI-Powered Truth Detection: Beyond the Polygraph

By Ronen Kolton Yehuda (Messiah King RKY)

Introduction

For decades, the polygraph, commonly known as the "lie detector", has been the default instrument for gauging truthfulness based on physiological cues. But its reliability is widely contested, and its results are often inadmissible in court. In the era of artificial intelligence, we now have the opportunity to move beyond this flawed legacy. AI-powered truth detection systems represent a new frontier, one where psychology, neuroscience, machine learning, and ethics converge.

The Limitations of Traditional Polygraphs

Polygraphs rely on indirect physiological indicators such as heart rate, blood pressure, respiration, and skin conductivity. The premise is that deception causes stress, and stress produces measurable changes. But this assumption is deeply flawed:

False Positives: Nervous but truthful individuals may appear deceptive.

False Negatives: Sociopaths or trained individuals may suppress physiological responses.

No Universal Baseline: Individual differences make it hard to standardize results.

The polygraph measures anxiety, not deception — a crucial distinction.

The Rise of AI in Lie Detection

Artificial Intelligence provides new, more direct pathways to assess truthfulness. It can process vast, multi-modal data streams in real time, integrating behavioral, verbal, facial, neural, and biometric signals. Major developments include:

1. AI-Powered Facial Microexpression Analysis

Using computer vision and deep learning, AI can detect subtle facial expressions and involuntary muscle movements, which can indicate concealed emotions or stress. These microexpressions can last as little as 1/25 of a second and are easily missed by the human eye.

2. Voice Stress Analysis

AI models trained on thousands of voice samples can identify micro-tremors, pitch changes, and timing irregularities that may indicate increased cognitive load or deceptive speech.

3. Neuroimaging and EEG Analysis

When combined with AI, EEG (electroencephalogram) and fMRI (functional MRI) scans offer more direct insights into the brain's responses. Techniques like P300 wave analysis can detect recognition responses when a subject is confronted with relevant stimuli.

4. Behavioral and Linguistic Pattern Detection

Natural Language Processing (NLP) enables AI to analyze written or spoken statements for logical inconsistencies, evasive phrasing, or overcompensation — all potential signs of deception.

Multimodal Integration

The most advanced systems integrate multiple channels:

Physiological data (heart rate, skin conductance)

Facial microexpressions

Voice patterns

Eye movements and blink rate

Brainwave patterns

Language content and structure

Using machine learning ensembles, these systems cross-validate findings from each modality, reducing false readings and increasing reliability.

Applications

Law Enforcement & Intelligence: Interrogation support without coercion.

Court Systems: Supplemental truth-checking in testimonies (with strict safeguards).

Employment & Clearance Vetting: Non-invasive integrity assessment.

Border Security: Real-time screening at airports or checkpoints.

Medical & Psychological Diagnostics: Identifying hidden trauma, phobias, or PTSD markers.

Ethical Concerns and Safeguards

With great power comes risk. AI truth detection raises serious ethical questions:

Privacy: Mental states are deeply personal.

Consent: Individuals must understand and agree to testing.

Bias: AI models must be trained on diverse datasets to avoid discrimination.

Misuse: Authoritarian regimes could weaponize truth tech for oppression.

To prevent abuse, transparent standards, oversight bodies, and appeal mechanisms must be built into any deployment.

The Future: Toward AI-Conscience Systems

The ultimate goal is not just to detect lies, but to understand truth. AI may soon evolve to become a "naive conscience" — neutral, ethical, and context-aware — able to assist in justice, therapy, diplomacy, and reconciliation.

In place of coercive interrogation, we may embrace collaborative AI interviewing — systems designed not to punish, but to understand.

Conclusion

Polygraphs belong to a bygone era. AI truth detection, while still developing, holds the promise of fairness, objectivity, and ethical accountability — if guided by the right principles. As we refine these technologies, we must ensure they serve humanity, not control it.

AI-Powered Deception Detection Systems: Replacing the Polygraph with Multimodal Truth Analysis

By Ronen Kolton Yehuda (Messiah King RKY)

Abstract

This article presents an overview of artificial intelligence (AI)-based systems for deception detection as a superior alternative to conventional polygraphs. While polygraphs rely on peripheral physiological signals subject to psychological variation, AI enables integrated multimodal analysis, including facial microexpressions, vocal biomarkers, linguistic features, and neurological responses. We describe system architecture, component models, sensor types, data fusion techniques, and performance metrics, along with ethical considerations.

1. Introduction

The need for effective truth verification spans domains such as law enforcement, border control, corporate security, military intelligence, and therapeutic settings. Traditional polygraphs measure autonomic responses (e.g., galvanic skin response, heart rate, respiration), but lack objectivity and robustness.

AI-powered systems offer multimodal fusion of behavioral, physiological, acoustic, and cognitive data, resulting in higher accuracy, scalability, and adaptability. This shift reflects advancements in machine learning, sensor technology, and affective computing.

2. Limitations of Polygraphs

High False Positive Rate: Truthful individuals may experience stress due to the interrogation setting.

Low Standardization: Results vary significantly by examiner skill.

Vulnerability to Countermeasures: Breathing control and muscle tension techniques can defeat polygraph indicators.

Lack of Cognitive Markers: Polygraphs cannot directly detect memory recognition or semantic inconsistency.

3. AI-Based Deception Detection Framework

3.1 System Overview

A typical AI deception detection system consists of:

Input Layer: Multimodal sensor array (visual, audio, biometric, cognitive)

Feature Extraction Modules: Using signal processing and deep learning

Model Ensemble: Specialized classifiers (CNNs, RNNs, transformers)

Decision Fusion: Bayesian or voting-based output integration

Result Interpretation Layer: Generates deception probability score or binary label

4. Key Modalities and Techniques

4.1 Facial Microexpression Recognition

Method: Use of Convolutional Neural Networks (CNNs) on high-frame-rate video

Features: Action Units (AUs), blink rate, eye movement, muscle tension

Tools: OpenFace, MediaPipe, FaceReader

4.2 Voice and Speech Analysis

Method: Spectral and prosodic feature extraction (e.g., MFCCs, pitch, jitter)

Models: LSTM, GRU, or Transformer-based classifiers for temporal patterns

Indicators: Pauses, hesitation, vocal tremor, pitch variability

4.3 Linguistic Analysis (NLP)

Method: Natural Language Understanding using models like BERT, RoBERTa

Features: Sentiment polarity, syntax inconsistency, lexical diversity, denial frequency

Tools: spaCy, NLTK, Hugging Face Transformers

4.4 Physiological and Neurological Signals

EEG-based: P300 detection for recognition-related stimuli

Eye Tracking: Fixation duration, pupil dilation

Wearable Sensors: GSR, heart rate variability (HRV), respiration via chest bands or rings

5. Data Fusion and Decision-Making

Early Fusion: Feature-level combination (e.g., concatenation of audio + visual vectors)

Late Fusion: Probability aggregation from individual models (weighted sum, majority vote)

Meta-Ensemble Learning: Secondary classifier trained on outputs of primary modalities
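The meta-ensemble idea, a secondary classifier trained on the outputs of the per-modality models, can be sketched with a small logistic regression fitted by gradient descent. Everything here is illustrative: the function names, learning rate, epoch count, and training rows are assumptions, and a real system would use held-out predictions and an established library.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_meta_learner(rows, labels, epochs=2000, learning_rate=0.5):
    """Fit logistic-regression weights on per-modality probabilities."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j in range(n_features):
                grad_w[j] += error * x[j]
            grad_b += error
        # Batch gradient step, averaged over the training rows.
        weights = [w - learning_rate * g / len(rows) for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / len(rows)
    return weights, bias


def predict(weights, bias, x):
    """Fused deception probability for one row of modality outputs."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Each row holds [face, voice, text] probabilities from primary models (made-up).
rows = [
    [0.9, 0.8, 0.7], [0.8, 0.6, 0.9], [0.7, 0.9, 0.6],
    [0.2, 0.3, 0.1], [0.1, 0.4, 0.3], [0.3, 0.2, 0.2],
]
labels = [1, 1, 1, 0, 0, 0]

weights, bias = train_meta_learner(rows, labels)
print(predict(weights, bias, [0.85, 0.8, 0.8]))
print(predict(weights, bias, [0.15, 0.2, 0.2]))
```

Unlike a fixed weighted sum, the secondary classifier learns from data how much to trust each modality.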

6. Evaluation Metrics

Accuracy: Up to 90% on controlled datasets (e.g., Real-Liars Corpus, Columbia Deception Corpus)

Precision/Recall: Critical in security applications

ROC-AUC: Assesses balance between false positives and negatives

Real-Time Latency: ~250–500 ms inference per subject for practical deployment
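ROC-AUC can be computed directly from its rank interpretation: the probability that a randomly chosen deceptive sample receives a higher score than a randomly chosen truthful one, with ties counted as half. The sketch below uses that pairwise definition; the scores and labels are made up, and the quadratic loop is meant for clarity, not for large datasets.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve via pairwise comparison of scores."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one example of each class")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


# Model scores with ground-truth labels (1 = deceptive, 0 = truthful).
scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.2]
labels = [1, 1, 1, 0, 0, 0]
print(roc_auc(scores, labels))
```

A value of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation; unlike accuracy, the result does not depend on a particular decision threshold.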

7. Applications

Border Security: Real-time virtual interviewer for suspicious behavior detection

Judicial Pre-Screening: Supplementary tool in depositions and testimonies

Corporate Vetting: Interview integrity analysis

Therapeutic Diagnostics: Trauma or memory recall validation

8. Ethical and Legal Considerations

Privacy: Handling of biometric and cognitive data must comply with GDPR and local laws

Consent: Must be informed, revocable, and explicitly recorded

Bias Mitigation: Requires diverse training data across age, gender, ethnicity

Auditability: Transparent model decisions and human-overridable outcomes

9. Challenges and Future Work

Cross-Cultural Generalization: Behavior varies across populations

Adversarial Robustness: Defense against deceptive actors trained to spoof sensors

Explainability: Making black-box AI decisions interpretable

Integration with Human Decision-Making: Augmenting, not replacing, investigators

Conclusion

AI-based deception detection systems represent a paradigm shift beyond traditional polygraphs. By harnessing multimodal analysis, machine learning, and ethical design, these systems offer a scalable, data-driven approach to truth verification. Continued innovation must be paired with strict governance to ensure responsible and fair use.

AI-Based Multimodal Deception Detection Systems: A Technical Overview

By Ronen Kolton Yehuda (Messiah King RKY)

Abstract

Traditional polygraph systems rely on physiological metrics to infer deception, but they suffer from limited accuracy, high subjectivity, and susceptibility to manipulation. This article explores the development of AI-powered, multimodal deception detection systems that integrate computer vision, voice analysis, neuroimaging, and behavioral modeling to produce more reliable, automated, and scalable truth verification technologies.

1. Introduction

Polygraphs measure indirect symptoms of cognitive stress (e.g., skin conductivity, heart rate) rather than detecting lies directly. Recent advances in AI, sensor fusion, and real-time signal processing enable a new class of systems that use data-driven models to classify truthfulness with higher precision.

2. Core Architecture

An AI-based deception detection system is typically built using the following modular components:

2.1 Signal Acquisition

Visual Sensors: RGB, IR, and depth cameras for facial and ocular tracking

Audio Sensors: High-fidelity microphones capturing speech and microtremors

Bio-Sensors: Heart rate monitors, galvanic skin response (GSR), electromyography (EMG)

Brainwave Interfaces: EEG or fNIRS devices (optional in clinical or high-security use)

2.2 Preprocessing Pipelines

Noise filtering (e.g., bandpass filters, artifact rejection in EEG)

Signal normalization and alignment

Facial landmark extraction and action unit encoding (via OpenFace, MediaPipe, etc.)

Voice signal feature extraction (MFCCs, jitter, shimmer, energy dynamics)
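Jitter and shimmer, two of the voice features named above, are commonly defined as the average cycle-to-cycle variation in pitch period and in peak amplitude, relative to the mean. The sketch below assumes the pitch periods and amplitudes have already been extracted by an upstream pitch tracker; the function names and sample values are hypothetical.

```python
from statistics import mean


def local_perturbation(values):
    """Mean absolute difference between consecutive values, relative to the mean."""
    if len(values) < 2:
        raise ValueError("need at least two cycles")
    diffs = [abs(b - a) for a, b in zip(values, values[1:])]
    return mean(diffs) / mean(values)


def jitter(periods_s):
    """Relative cycle-to-cycle variation in pitch period (seconds)."""
    return local_perturbation(periods_s)


def shimmer(peak_amplitudes):
    """Relative cycle-to-cycle variation in peak amplitude."""
    return local_perturbation(peak_amplitudes)


# Made-up values for four consecutive glottal cycles.
periods_s = [0.010, 0.011, 0.010, 0.011]
peak_amplitudes = [0.50, 0.52, 0.49, 0.51]
print(jitter(periods_s), shimmer(peak_amplitudes))
```

Both values are dimensionless ratios, which makes them easier to compare across speakers than raw period or amplitude differences.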

3. AI Models Used

3.1 Computer Vision Models

CNNs (e.g., ResNet, MobileNet) trained on datasets of microexpressions

LSTM-based RNNs for temporal modeling of facial cues

Attention Networks for anomaly detection in face/eye dynamics

3.2 Speech & Voice Stress Analysis

Spectrogram-based CNNs for stress detection

Temporal Convolutional Networks for detecting vocal inconsistencies

NLP Transformers (e.g., BERT) for semantic and syntactic analysis of verbal responses

3.3 EEG Signal Interpretation (for advanced deployments)

ERP (Event-Related Potential) classifiers — e.g., detection of P300 waves

CNN-LSTM hybrids for multi-channel EEG classification

ICA (Independent Component Analysis) for source separation

3.4 Ensemble Classifiers

Final decision is made via late fusion or Bayesian model averaging, integrating:

Visual-based deception probability

Acoustic-based deception probability

Physiological-based deception probability

Cognitive-based deception probability (if EEG/fMRI used)
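Bayesian model averaging can be sketched by weighting each modality's probability by how well that modality explained labeled validation data. This is a simplified illustration that assumes a uniform prior over models and treats each model as fixed; the modality names and numbers are hypothetical.

```python
import math


def log_likelihood(predicted, labels):
    """Log-likelihood of binary labels under a model's predicted probabilities."""
    eps = 1e-9  # guards against log(0)
    return sum(
        math.log(max(p if y == 1 else 1.0 - p, eps))
        for p, y in zip(predicted, labels)
    )


def bma_weights(validation_predictions, labels):
    """Posterior model weights under a uniform prior (assumed for this sketch)."""
    logs = {m: log_likelihood(p, labels) for m, p in validation_predictions.items()}
    top = max(logs.values())
    # Subtract the maximum before exponentiating for numerical stability.
    unnormalized = {m: math.exp(v - top) for m, v in logs.items()}
    total = sum(unnormalized.values())
    return {m: u / total for m, u in unnormalized.items()}


def bma_predict(weights, probabilities):
    """Weighted combination of per-modality deception probabilities."""
    return sum(weights[m] * probabilities[m] for m in weights)


# Validation predictions per modality and the true labels (made-up).
validation_predictions = {
    "visual": [0.9, 0.8, 0.2, 0.1],
    "acoustic": [0.6, 0.5, 0.5, 0.4],
}
labels = [1, 1, 0, 0]

weights = bma_weights(validation_predictions, labels)
print(weights)
print(bma_predict(weights, {"visual": 0.7, "acoustic": 0.4}))
```

The modality that fit the validation data better receives most of the weight, so the fused probability sits closer to its output.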

4. Training and Datasets

Training Data: Multimodal truth/lie datasets (e.g., Deception Detection Toolkit, Real-Liars Corpus, SEMAINE, DAIC-WOZ)

Annotation: Expert-labeled video and audio clips; ground truth from confessions or verified data

Augmentation: Synthetic data generation using GANs for underrepresented emotion states

5. Performance Metrics

Accuracy: Typically 80–95% depending on modality combination and context

False Positive/Negative Rate: Tuned through calibration layers

AUC/ROC Scores: Used for model validation

Latency: Optimized for real-time inference (< 500 ms per frame/segment)
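One simple way to tune the false-positive rate is to choose the decision threshold from the scores of known-truthful validation samples. The sketch below is one possible approach, not the calibration layer of any specific system; the function name, flagging rule, and scores are hypothetical.

```python
def threshold_for_target_fpr(truthful_scores, target_fpr):
    """Return a threshold such that flagging scores strictly above it
    keeps the false-positive rate on these samples at or below target_fpr."""
    if not 0.0 <= target_fpr < 1.0:
        raise ValueError("target_fpr must be in [0, 1)")
    ordered = sorted(truthful_scores, reverse=True)
    # Number of truthful samples that may be wrongly flagged.
    allowed = int(target_fpr * len(ordered))
    return ordered[allowed]


# Model scores for ten people known to be truthful (made-up).
truthful_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 0.95]
threshold = threshold_for_target_fpr(truthful_scores, target_fpr=0.1)
flagged = sum(1 for s in truthful_scores if s > threshold)
print(threshold, flagged / len(truthful_scores))
```

Lowering the target rate raises the threshold, which reduces false positives at the cost of missing more deceptive cases; that trade-off is what the ROC curve summarizes.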

6. Applications

Border Security: Real-time lie detection during automated interviews

Law Enforcement: Supplementing human interrogations with analytical models

HR & Insider Threat Prevention: Integrity assessments during hiring or investigation

Therapy and PTSD Diagnostics: Detection of suppressed traumatic responses

High-Security Access: Cognitive authentication protocols

7. Limitations and Risks

Bias in Training Sets: Cultural and individual expression differences can skew accuracy

Privacy and Consent: Brainwave and facial data are sensitive; ethics protocols required

Robustness: Environmental noise and occlusions (e.g., masks, glasses) affect reliability

Legal Admissibility: Varies by jurisdiction; system output often advisory, not conclusive

8. Future Directions

Integration with blockchain for secure logging of interaction data

Personal calibration modules to reduce baseline variability

Federated learning for private model adaptation

Embodied deployment in humanoid AI interviewers

Naive AI modules as an ethical arbitration layer for deployed truth-detection systems

Conclusion

AI-powered deception detection offers a more advanced, accurate, and scalable approach to truth verification compared to traditional polygraphs. With proper safeguards, such systems could become vital tools across security, legal, psychological, and corporate domains. However, careful consideration of ethics, privacy, and deployment context is critical to ensure responsible use.

AI and the Future of Lie Detection: Replacing the Polygraph with Smarter Truth Technologies

By Ronen Kolton Yehuda (Messiah King RKY)

Why Lie Detection Matters

From crime investigations and airport security to job screenings and courtrooms, the ability to detect lies and uncover the truth has always been a powerful tool. For more than a century, the polygraph — often called a "lie detector" — has been used to measure physical signs of stress (like heart rate and sweating) to determine if someone is being dishonest.

But there’s a problem: polygraphs aren’t always accurate. Nervous people can appear guilty. Trained liars can fool the machine. And in many countries, polygraph results aren't even accepted in court.

That’s where Artificial Intelligence (AI) steps in — offering a new generation of smarter, faster, and more reliable truth detection technologies.

How AI Is Changing Truth Detection

AI doesn’t rely on just one signal like a heartbeat or sweat. It can combine and analyze many types of data all at once — including how we speak, how our face moves, how we blink, and even how our brain responds to certain questions.

Here are a few of the ways AI can detect lies:

1. Facial Expressions

AI systems can track tiny, fast facial movements (called microexpressions) that show when someone is hiding emotions or under stress — even if they're trying to stay calm.

2. Voice Patterns

AI listens for changes in your voice: tone, pitch, speed, hesitation, and even muscle tremors in your throat. These clues can signal when someone is nervous or not telling the whole truth.

3. Language Analysis

Advanced language models can study the words people use — detecting over-explaining, contradictions, or vague answers that often show up in deceptive speech.

4. Brain and Body Signals

In high-security environments, AI can even work with devices like EEG (to measure brain activity) or skin sensors. Some systems use this to spot recognition or stress reactions when people are shown certain images or asked specific questions.

What Makes AI Lie Detection Better?

Faster: AI can analyze multiple signals in real time — perfect for live interviews or airport screenings.

More Accurate: By combining different types of data, AI reduces the chance of false positives or negatives.

Less Human Bias: AI systems can be trained to be fair and consistent, avoiding human errors or personal judgments.

Scalable: AI systems can be installed in kiosks, cameras, or virtual interviews — helping screen many people at once.

Where It’s Being Used

AI truth detection isn’t science fiction. It’s already being tested or used in:

Border control systems that ask travelers questions and analyze their responses

Law enforcement as a tool to support investigations

Security clearance screenings for sensitive jobs

Therapy and healthcare to detect hidden trauma or stress

Corporate environments during conflict resolution or fraud investigations

Risks and Ethics

Of course, with such powerful technology, there are serious concerns:

Privacy: Should a machine be allowed to read your face, voice, or thoughts without permission?

Accuracy: AI is only as good as the data it’s trained on — and it must work for all cultures and languages.

Abuse of Power: If used incorrectly, governments or companies could use this tech to control or intimidate people.

Legal Questions: Should AI results be allowed in court or police cases? That depends on fairness and transparency.

The Path Forward

AI truth technology will never be perfect — but it doesn’t need to be. Instead, it should be used carefully, ethically, and as a tool to support human judgment, not replace it. When guided by principles of justice and transparency, AI can help us build a more honest world.

The polygraph may be fading — but the future of lie detection is smarter, deeper, and more responsible. And it's powered by artificial intelligence.

Polygraphs and AI: The Past and Future of Lie Detection

By Ronen Kolton Yehuda (Messiah King RKY)

The Timeless Human Desire for Truth

Whether in police investigations, border security, courtroom testimonies, or hiring processes, people have long searched for reliable ways to detect lies. The question is simple: Can we know when someone is telling the truth?

For over a century, one tool dominated the answer: the polygraph.

Now, a new generation of technology is emerging — powered by Artificial Intelligence (AI) — promising faster, smarter, and possibly more accurate methods of truth detection.

This article compares both: the traditional polygraph and the new AI-based deception detection systems — how they work, where they are used, and what the future might hold.

What Is a Polygraph?

The polygraph, also called a lie detector, measures physical signs of stress during questioning:

Heart rate

Breathing rate

Blood pressure

Skin conductivity (sweat levels)

The idea is that lying causes emotional stress, and this stress creates physical changes that the machine can detect.

But here’s the problem:

Polygraphs don’t actually detect lies. They detect stress — and stress can come from many things, including fear, trauma, or simply being accused.

Weaknesses of the Polygraph

False Positives: Nervous but honest people may “fail”

False Negatives: Trained or calm liars may “pass”

Inadmissibility: Courts in many countries do not accept polygraph results as hard evidence

Human Interpretation: Results depend on the skill and judgment of the examiner

Despite these weaknesses, polygraphs are still widely used in law enforcement, intelligence, and employment screening — especially for high-security jobs.

Enter AI: A New Way to Detect Deception

Today, artificial intelligence brings a new approach. Instead of just measuring stress, AI looks at behavior, speech, facial expressions, language, and even brain activity.

These systems are called AI-powered deception detection systems, and they are already being tested in airports, border control stations, police interviews, and even in business.

What Can AI Analyze?

Facial Microexpressions

Small, involuntary facial movements — sometimes lasting less than a second — can reveal hidden emotions like guilt, fear, or deception.

Voice and Speech

AI listens for subtle voice changes, hesitation, pitch shifts, or patterns that may indicate lying or emotional conflict.

Language and Words

Natural Language Processing (NLP) tools can analyze how people speak — including their choice of words, logical consistency, and emotional tone.

Eye and Body Signals

Eye movement, blinking, and pupil dilation are also valuable. Some systems even use brainwave (EEG) or biometric sensors to detect recognition or stress.

Combined Multimodal Analysis

The best AI systems use multiple data sources at once, building a fuller picture of behavior and analyzing it in real time.

Comparing Polygraph and AI-Based Systems

| Feature | Traditional Polygraph | AI-Based Truth Detection |
| --- | --- | --- |
| Focus | Physical signs of stress | Behavior, language, expression, sound |
| Accuracy | Often questioned | High in early tests (up to 90%) |
| Operator Bias | Human-dependent | Algorithmic, though still trained |
| Speed | Manual process | Real-time or near real-time |
| Legal Acceptance | Limited use in courts | Emerging, under ethical debate |
| Use of Data | Heart/sweat/breath only | Voice, face, language, biometric, EEG |

Where Both Are Used

| Use Case | Polygraph Use | AI Use (Current or Experimental) |
| --- | --- | --- |
| Police/Forensics | Common for suspects | Used in interviews and court evaluation |
| Border Security | Rare or slow | AI virtual agents for screening |
| Intelligence | Clearance screenings | Insider threat detection |
| Hiring | Rare and controversial | Screening in sensitive roles |
| Mental Health | Not used | Emotion recognition and trauma analysis |

Ethical Questions for the Future

These systems raise serious and important questions:

Can people give true consent for these tests?

What happens if AI makes a wrong judgment?

How do we prevent abuse or misuse by authorities?

Can AI truly understand culture, context, and human emotion?

Like the polygraph before it, AI is not perfect — but it may offer a more complete and fair analysis, if it is used with ethics, transparency, and human oversight.

Final Thoughts

We are moving from a world where truth detection focused only on the body — to a world where machines can analyze faces, voices, words, and minds.

But truth isn’t just about technology. It’s about fairness, dignity, and respect.

AI may be smarter than the polygraph, but it must also be wiser — and serve justice, not judgment.

From Polygraph to Multimodal AI: A Technical Examination of Lie Detection Technologies

By Ronen Kolton Yehuda (Messiah King RKY)

Abstract

Lie detection is a critical component in domains such as criminal investigation, intelligence, border control, and high-security employment. Traditional polygraph systems rely on autonomic physiological signals to infer deception but suffer from limitations in accuracy, interpretability, and legal validity. Recent advances in artificial intelligence (AI) have led to the development of multimodal deception detection systems that integrate facial, vocal, linguistic, and biometric data to improve reliability. This article provides a comparative technical analysis of polygraph technology and AI-powered systems, focusing on architecture, modalities, signal processing, classification models, accuracy, and deployment challenges.

1. Introduction

The desire to objectively detect deception has motivated technological development for over a century. The polygraph, invented in the early 20th century, was one of the first systematic tools to infer lying behavior from physiological signals. However, due to issues such as high false positive rates, susceptibility to countermeasures, and poor legal standing, the polygraph's utility is limited.

Emerging AI-based lie detection systems offer a more robust alternative. By fusing multimodal behavioral data with machine learning, these systems move beyond indirect stress markers toward cognitive and behavioral pattern recognition.

2. Polygraph Technology Overview

2.1 Signal Acquisition

Polygraphs typically record the following signals:

Electrodermal Activity (EDA) – skin conductivity

Cardiovascular Activity – heart rate and blood pressure

Respiration Rate – chest/belly expansion via pneumatic tubes

2.2 Hypothesis

The polygraph assumes that deception produces stress, which causes measurable changes in autonomic signals. However, this hypothesis lacks universality, as emotional and situational variables can produce similar effects in truthful subjects.

2.3 Technical Limitations

No direct measurement of cognitive conflict or recognition

Susceptible to intentional countermeasures (breathing control, muscle tensing)

Results interpretation is examiner-dependent and lacks standardization

Not admissible in many legal systems

3. AI-Based Deception Detection Systems

3.1 System Architecture

AI-based systems are built around multimodal analysis combining:

Visual Data (e.g., facial expressions, eye movement)

Audio Data (e.g., voice tone, pitch, and hesitations)

Text/Linguistic Data (e.g., word choice, sentence structure)

Biometric Data (e.g., EEG, heart rate variability)

Each modality is processed independently and fused at either feature level (early fusion) or decision level (late fusion).
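
The difference between the two fusion levels can be sketched in a few lines of Python. This is only an illustration: the feature values, per-modality scores, and weights are made up, and the function names `early_fusion` and `late_fusion` are hypothetical, not taken from any particular system.

```python
from typing import Dict, List


def early_fusion(features: Dict[str, List[float]]) -> List[float]:
    """Feature-level fusion: concatenate per-modality feature vectors.

    Modalities are visited in sorted name order so the layout of the
    combined vector stays the same from one sample to the next.
    """
    fused: List[float] = []
    for name in sorted(features):
        fused.extend(features[name])
    return fused


def late_fusion(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Decision-level fusion: weighted average of per-modality scores.

    Each score is assumed to be a deception probability in [0, 1]
    produced by an independent model for that modality.
    """
    total_weight = sum(weights[name] for name in scores)
    return sum(scores[name] * weights[name] for name in scores) / total_weight


# Hypothetical inputs for one interview segment (illustrative values only).
features = {"audio": [0.2, 0.7], "text": [0.1], "visual": [0.9, 0.4, 0.3]}
scores = {"audio": 0.6, "text": 0.3, "visual": 0.8}
weights = {"audio": 1.0, "text": 1.0, "visual": 2.0}

fused_vector = early_fusion(features)       # input for a single classifier
fused_score = late_fusion(scores, weights)  # combined output of three classifiers
```

With early fusion a single classifier sees all modalities together and can learn interactions between them. With late fusion each modality keeps its own model, which makes it easier to drop or replace a sensor.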

3.2 Facial Microexpression Recognition

Captured using high-frame-rate RGB/IR cameras

Feature extraction via facial Action Units (AUs)

Models: CNN, CNN-LSTM, 3D-CNN (expression classification), YOLO-Face (face detection)

Tools: OpenFace, Affectiva, Dlib, MediaPipe

3.3 Voice Stress and Acoustic Analysis

Feature extraction: MFCCs, jitter, shimmer, energy contours

Classification models: SVM, GRU, BiLSTM, Transformers

Analysis of speech tempo, pauses, and tremors indicating cognitive load
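
Two of the features named above, jitter and shimmer, have simple textbook definitions: the average cycle-to-cycle change in pitch period (jitter) or peak amplitude (shimmer), relative to the average value. The sketch below assumes the per-cycle periods and amplitudes have already been extracted by a pitch tracker, which is not shown. The sample numbers and function names are illustrative.

```python
from typing import List


def relative_perturbation(values: List[float]) -> float:
    """Mean absolute difference between consecutive values,
    divided by the mean of all values."""
    if len(values) < 2:
        raise ValueError("need at least two cycles")
    diffs = [abs(b - a) for a, b in zip(values, values[1:])]
    return (sum(diffs) / len(diffs)) / (sum(values) / len(values))


def local_jitter(periods_s: List[float]) -> float:
    """Cycle-to-cycle variation of the pitch period (seconds per cycle)."""
    return relative_perturbation(periods_s)


def local_shimmer(peak_amplitudes: List[float]) -> float:
    """Cycle-to-cycle variation of the peak amplitude."""
    return relative_perturbation(peak_amplitudes)


# Hypothetical measurements for four consecutive glottal cycles.
periods = [0.0100, 0.0102, 0.0099, 0.0101]
amplitudes = [1.0, 0.9, 1.1, 1.0]

jitter = local_jitter(periods)
shimmer = local_shimmer(amplitudes)
```

Values like these would be one part of the feature vector passed to a classifier, alongside MFCCs and energy contours.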

3.4 Natural Language Processing (NLP)

Features: syntactic complexity, over-specification, negation, evasion

Models: BERT, RoBERTa, and GPT-style transformer language models

Applications: interview analysis, written deception detection
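
As a rough illustration of surface-level linguistic features, the sketch below counts negations and hedging words in a transcript. The word lists are assumptions chosen for the example, and hedging words are used here only as a crude stand-in for "evasion". A production system would rely on a trained language model, not fixed lists.

```python
import re
from typing import Dict

# Illustrative word lists only (assumptions, not a validated lexicon).
NEGATIONS = {"no", "not", "never", "none", "nobody", "nothing"}
HEDGES = {"maybe", "perhaps", "possibly", "probably", "somewhat"}


def lexical_features(text: str) -> Dict[str, float]:
    """Compute a few simple rates from a transcript."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[a-z']+", text.lower())
    if not words or not sentences:
        raise ValueError("text contains no words")
    negations = sum(w in NEGATIONS or w.endswith("n't") for w in words)
    hedges = sum(w in HEDGES for w in words)
    return {
        "words_per_sentence": len(words) / len(sentences),
        "negation_rate": negations / len(words),
        "hedge_rate": hedges / len(words),
    }


sample = "I did not take it. Maybe someone else was there. I never saw the money."
features = lexical_features(sample)
```

These rates say nothing about truthfulness on their own. They are inputs that a classifier would weigh together with other signals.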

3.5 Neurophysiological Integration (Optional)

EEG: P300 responses indicating recognition of key stimuli

Eye-tracking: pupil dilation, fixation metrics

Tools: Emotiv, NeuroSky, Tobii eye trackers

4. Signal Processing and Model Training

4.1 Data Preprocessing

Signal denoising (Butterworth filters, ICA for EEG)

Normalization and alignment across time series

Data augmentation via GANs or adversarial simulation
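
The normalization and alignment step can be sketched with the Python standard library. The example assumes two uniformly sampled streams that cover the same time window at different rates. Denoising (Butterworth filtering, ICA) is left out because it would need a signal-processing library. The sample values and function names are hypothetical.

```python
from statistics import mean, pstdev
from typing import List


def zscore(signal: List[float]) -> List[float]:
    """Rescale a signal to zero mean and unit (population) standard deviation."""
    mu = mean(signal)
    sigma = pstdev(signal)
    if sigma == 0:
        return [0.0 for _ in signal]
    return [(x - mu) / sigma for x in signal]


def resample_linear(signal: List[float], n_out: int) -> List[float]:
    """Linearly interpolate a uniformly sampled signal onto n_out points
    spanning the same time interval."""
    if n_out < 2 or len(signal) < 2:
        raise ValueError("need at least two samples in and out")
    step = (len(signal) - 1) / (n_out - 1)
    out: List[float] = []
    for i in range(n_out):
        pos = i * step
        lo = min(int(pos), len(signal) - 2)  # keep lo + 1 inside the list
        frac = pos - lo
        out.append(signal[lo] * (1 - frac) + signal[lo + 1] * frac)
    return out


# Hypothetical streams covering the same window at different sampling rates.
heart_rate = [60.0, 62.0, 64.0]                  # 3 samples
pitch_hz = [110.0, 112.0, 111.0, 115.0, 114.0]   # 5 samples

heart_rate_aligned = resample_linear(heart_rate, len(pitch_hz))
heart_rate_norm = zscore(heart_rate_aligned)
pitch_norm = zscore(pitch_hz)
```

After this step both streams have the same length and scale, so they can be stacked sample by sample for feature-level fusion.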

4.2 Training Data

Datasets: Real-Liars Corpus, Columbia Deception Corpus, P300 datasets

Ground-truth labeling via confession, expert annotation, or event-based design

Consideration of ethical and privacy constraints in biometric data use

5. Decision Fusion and Output

Early Fusion: Combined feature vector across modalities

Late Fusion: Aggregated decision scores from independent models

Ensemble Voting or Bayesian Averaging for final prediction

Output: binary label (truth/lie), deception probability score, or trust ranking
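
The three output forms listed above can be illustrated with a small sketch. It uses simple majority voting and an unweighted mean of per-model scores, which is a simplification and not full Bayesian model averaging. The scores, the 0.5 threshold, and the statement names are made up for the example.

```python
from statistics import mean
from typing import Dict, List


def majority_vote(votes: List[bool]) -> bool:
    """True (deceptive) only if strictly more than half of the models say so."""
    return sum(votes) * 2 > len(votes)


def to_label(score: float, threshold: float = 0.5) -> str:
    """Binary output derived from a probability score."""
    return "lie" if score >= threshold else "truth"


# Hypothetical deception probabilities from three independent models.
model_scores: Dict[str, List[float]] = {
    "statement_a": [0.8, 0.7, 0.4],
    "statement_b": [0.2, 0.3, 0.1],
    "statement_c": [0.6, 0.4, 0.3],
}

results: Dict[str, dict] = {}
for name, scores in model_scores.items():
    avg = mean(scores)
    results[name] = {
        "vote": majority_vote([s >= 0.5 for s in scores]),
        "score": avg,
        "label": to_label(avg),
    }

# Ranking: statements ordered from least to most suspicious.
ranking = sorted(results, key=lambda name: results[name]["score"])
```

A deployed system would more likely report the score than the hard label, so that a human reviewer can see how close a case is to the threshold.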

6. Performance Comparison

| Metric | Traditional Polygraph | AI-Based Multimodal Systems |
| --- | --- | --- |
| Primary Inputs | Autonomic signals | Face, voice, language, EEG, etc. |
| Accuracy | 60–80% (widely variable) | 80–95% (in structured tests) |
| Real-Time Capability | No | Yes (≤500 ms inference possible) |
| Interpretability | Low (subjective) | Medium-High (with explainable AI) |
| Legal Admissibility | Limited | Emerging (not yet standardized) |
| Countermeasure Resistance | Low | Medium–High |
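
Accuracy figures like those in the comparison above come from comparing a system's predictions with known ground truth. The sketch below shows how accuracy, false positive rate, and false negative rate could be computed from such a comparison. The ten labeled cases are invented for illustration and do not come from any study.

```python
from typing import Dict, List


def screening_metrics(truth: List[bool], predicted: List[bool]) -> Dict[str, float]:
    """Accuracy and error rates, where True means 'deceptive'."""
    if len(truth) != len(predicted) or not truth:
        raise ValueError("inputs must be non-empty and of equal length")
    pairs = list(zip(truth, predicted))
    tp = sum(t and p for t, p in pairs)              # liars flagged
    tn = sum((not t) and (not p) for t, p in pairs)  # honest people cleared
    fp = sum((not t) and p for t, p in pairs)        # honest people flagged
    fn = sum(t and (not p) for t, p in pairs)        # liars missed
    return {
        "accuracy": (tp + tn) / len(pairs),
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
        "false_negative_rate": fn / (fn + tp) if (fn + tp) else 0.0,
    }


# Hypothetical test set: 2 deceptive and 8 truthful subjects.
truth = [True, True] + [False] * 8
predicted = [True, False, True] + [False] * 7

metrics = screening_metrics(truth, predicted)
```

In this made-up example the accuracy is 80%, yet half of the deceptive subjects are missed. A single accuracy number is therefore best read together with the two error rates.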

7. Ethical Considerations

Privacy: Protection of biometric and neurocognitive data

Consent: Must be informed and revocable

Bias: Training must ensure cultural and demographic fairness

Accountability: Human oversight and audit trails are essential

8. Future Directions

Hybrid Polygraph-AI Systems: Combining physiological and behavioral AI

Federated Learning: Privacy-preserving training on sensitive datasets

Explainable AI (XAI): Enhancing legal and institutional transparency

Wearable Integration: Deployment in mobile, real-time applications

Ethical Governance Models: Institutions for oversight and approval

Conclusion

Polygraphs, though historically important, are no longer the gold standard for lie detection. AI-based deception systems offer a powerful, multidimensional approach capable of operating in real time and providing richer behavioral insights. Their reported accuracy and countermeasure resistance in structured tests are higher, but their ethical implementation remains a work in progress. The future of truth verification lies not in abandoning the polygraph entirely, but in integrating and evolving its foundation through intelligent systems.

Truth Machines: How Artificial Intelligence Is Redefining Lie Detection

By Ronen Kolton Yehuda (Messiah King RKY)

The Search for Truth — Upgraded

For over 100 years, we've used machines to help uncover lies. The polygraph, once seen as a breakthrough, works by measuring signs of physical stress — such as changes in heart rate, breathing, and sweat. The assumption? When people lie, they get nervous.

But as many experts agree, being nervous isn’t the same as being dishonest. Truthful people can fail lie detector tests. Skilled liars can pass them.

That’s why researchers and tech companies have started building something better: AI-powered lie detection systems that go beyond stress to analyze behavior, language, and emotion — all at once.

How AI Detects Lies Differently

Instead of looking only at your body’s reactions, AI systems use multiple types of information (called modalities) to assess truthfulness. Here's what that includes:

1. Your Face

Tiny facial movements — like a raised eyebrow or tight lips — can appear when someone is hiding something. These microexpressions happen so quickly that humans miss them. AI can catch them instantly.

2. Your Voice

Your tone, speed, hesitation, and breathing patterns change under pressure. AI listens for vocal stress patterns that may indicate deception.

3. Your Words

AI can process how people speak — spotting contradictions, vague answers, or overly detailed stories that might suggest someone is lying.

4. Your Eyes and Brain

Advanced systems can track eye movement, blink rate, or even use brainwave sensors (EEG) to detect whether a person recognizes key information — a method used in memory-based lie detection.

Real-World Use Cases

AI lie detection is no longer a theory. It’s already being tested and applied in various settings:

Airports and Borders: AI-powered virtual agents ask travelers questions and assess their responses in real time.

Law Enforcement: Investigators use AI tools during interviews to flag possible deception.

Hiring: Some companies use AI to help evaluate candidates for high-trust roles.

Therapy: AI may help detect when patients are avoiding or struggling to share painful memories.

Security Clearance: Used in sensitive government or military screenings.

Why It’s More Reliable Than the Polygraph

| Feature | Polygraph | AI Lie Detection |
| --- | --- | --- |
| What it detects | Stress (heart rate, sweat, etc.) | Behavior, voice, emotion, cognition |
| False positives | Common in nervous people | Less likely (uses many data points) |
| Can it be fooled? | Yes, with training | Very difficult to manipulate |
| Used in court? | Rarely accepted | Not yet, but under discussion |
| Operator bias | High (human interpretation) | Low (machine-based, consistent) |

What About Ethics and Privacy?

As promising as these tools are, they raise serious concerns:

Consent: People must agree to be analyzed.

Bias: AI must be trained on diverse populations to avoid unfair outcomes.

Misuse: In the wrong hands, truth detection could be used to intimidate or control.

Accuracy: No system is perfect — human review should always be part of the process.

AI can help reveal the truth, but it must not be used to replace judgment or justice.

Looking Ahead

The dream of a machine that knows when someone is lying is becoming reality. But it’s not about catching people — it’s about building fairer, smarter, and more reliable systems for security, justice, and society.

AI can help us get closer to the truth — but only if we use it with care, respect, and responsibility.